Welcome to EaseRobot, a cutting-edge autonomous robot designed to revolutionize home automation. This innovative DIY robotics project aims to create a sophisticated house-bot that can navigate and interact with its environment. In this series, we'll delve into the project's details, starting with the concept, hardware selection, and initial software development.

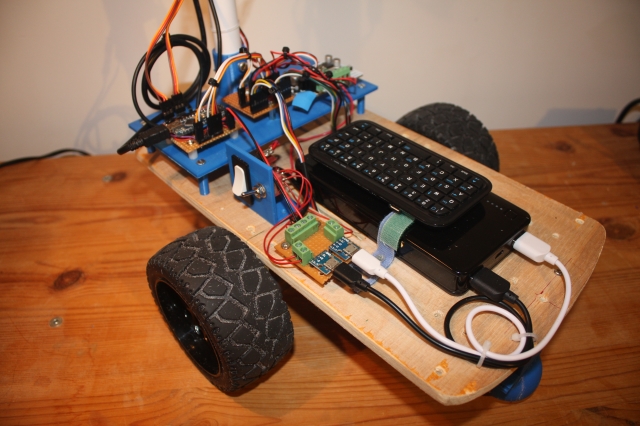

Inspired by the possibilities of modern robotics, EaseRobot is built around a Raspberry Pi 3, Model B, leveraging its processing power and versatility. By harnessing the capabilities of the Robot Operating System (ROS) and Raspberry Pi, we can focus on developing the robot's features rather than building a custom processor board from scratch.

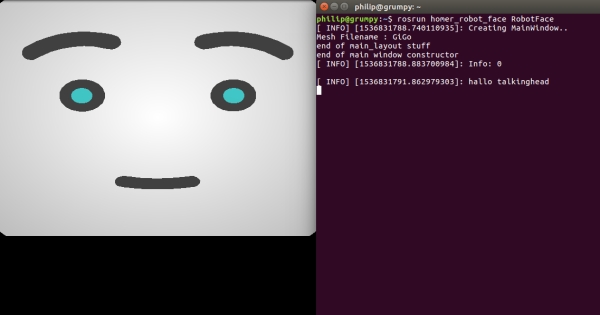

EaseRobot is designed to perform various tasks, including facial recognition, speech synthesis, and autonomous locomotion. Our robot will be equipped with a 7" touchscreen display and a camera module, enabling it to interact with users and navigate its surroundings. With ROS, we can develop and test nodes for the system, simulate the robot's behavior, and refine our code.

In this project, we'll explore various "missions" that EaseRobot can perform, starting with the ability to take messages to specific individuals. We'll break down this mission into smaller design goals, including face recognition, speech synthesis, locomotion control, and navigation. Join us on this exciting journey as we bring EaseRobot to life and explore the possibilities of autonomous robotics.

Getting Started

First installment in a series on a ROS-based Smart Home Bot

Introduction

The EaseRobot project is an innovative DIY robotics endeavor aimed at designing and building a sophisticated autonomous house-bot. This article marks the beginning of a series that will delve into the project's details. In this initial part, we'll introduce the concept, select a suitable single-board computer, install ROS (Robot Operating System), and develop the initial control software.

Background

In the late 1970s and early 1980s, I was inspired by two influential books: "How to build your own self-programming robot" by David L. Heiserman and "How to build a computer-controlled robot" by Tod Loofbourrow. The original plan was to design a custom processor board based on a Z80 processor and then build a robot around it. Unfortunately, the project never took off. Fast-forward to today, with the advent of compact boards like the Raspberry Pi and Arduino, creating a home robot has become significantly more accessible, although our expectations of its capabilities have increased dramatically.

As a nod to one of these books, our robot is named EaseRobot.

Unlike in the early 80s, we're fortunate to have a wide range of options available. EaseRobot will be built around a Raspberry Pi 3, Model B with 1GB of RAM, making it easier to focus on the robot's development rather than building a processor board from scratch.

Harnessing the Power of ROS and Raspberry Pi

I'll delve into how I've leveraged ROS in the EaseRobot project, highlighting how I've utilized various ROS tools to test and refine my code. While this isn't a comprehensive ROS tutorial, I'll provide essential ROS terms and concepts to facilitate a smooth read. For in-depth tutorials, I recommend exploring the ROS Wiki.

To begin with, here are some key ROS concepts:

- ROS is a distributed system, enabling robot code to run on multiple machines that communicate over a network.

- A node is a single-purpose executable that performs a specific task.

- Nodes are organized into packages, which are collections of folders and files.

- Nodes can be written in multiple languages, including C++ and Python, which we'll use in this project.

- Nodes communicate with each other using Topics, which are one-way streams of data.

- Each topic carries Messages of a single type. Messages are data structures that can be standard or user-defined.

- Nodes can also communicate using Services, a server/client blocking protocol, and Actions, a non-blocking goal-oriented task protocol.

- The master node, roscore, is the central hub that all other nodes register with, ensuring seamless communication.

- ROS utilizes a catkin build system and provides various tools for examining and simulating the system.

- Individual nodes can be run using the rosrun command or the launch tool, which enables starting multiple nodes from a single command terminal.

- ROS includes a parameter server, allowing nodes to store and retrieve parameters during runtime.

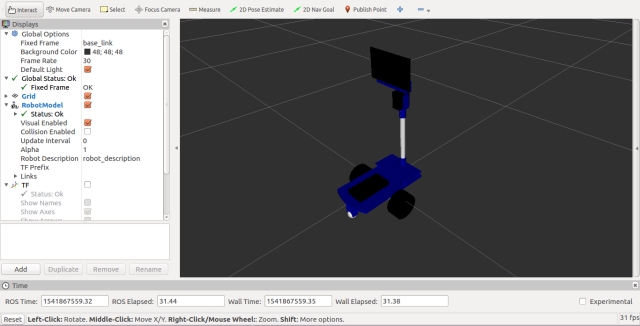

With the decision to use a Raspberry Pi 3 as the main processor and ROS, the first step is to install ROS on the Pi. To simplify the process, I'll use an Ubuntu image for the Raspberry Pi that includes ROS, available for free from the Ubiquity Robotics website. This image features the Kinetic version of ROS and includes useful ROS packages, such as the raspicam_node for accessing the Raspberry Pi camera.

Other Raspberry Pi peripherals I plan to use in the EaseRobot project include:

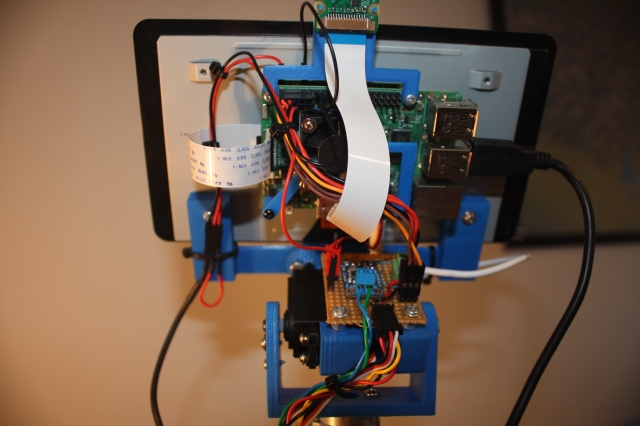

- 7" Touchscreen Display

- Camera Module V2

The display will be used to convey status information, web content, and an animated robot face to the user. The camera will serve as the robot's eyes, initially used for facial recognition.

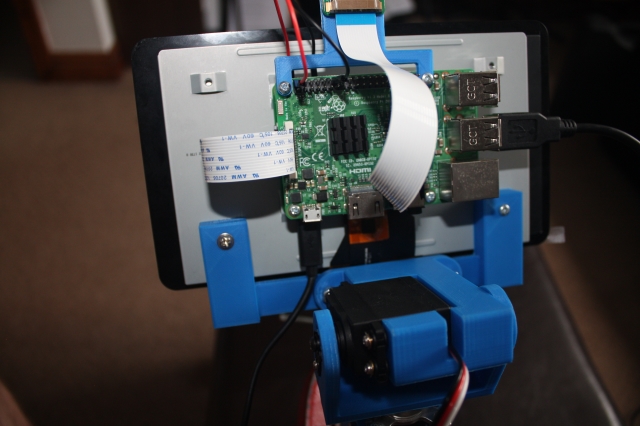

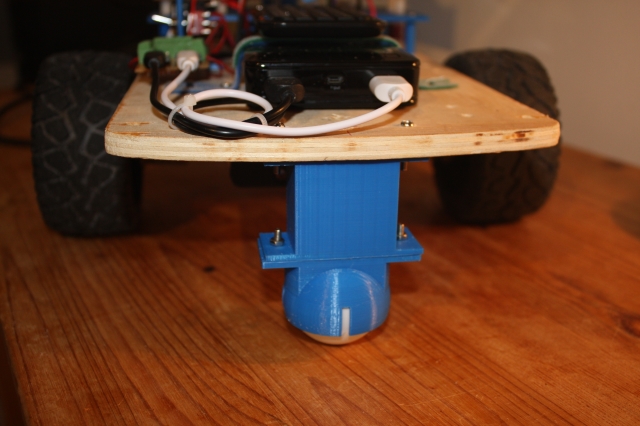

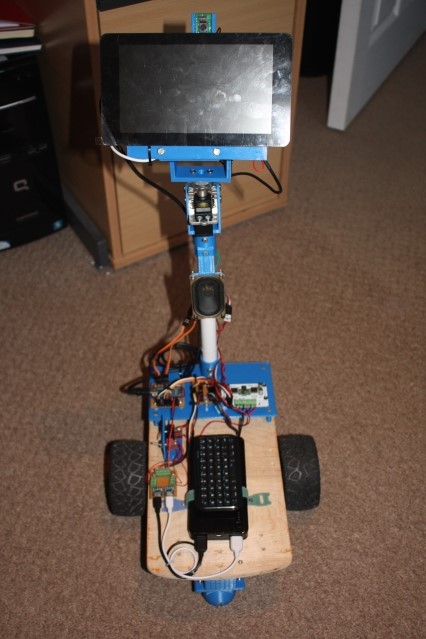

The images above show the 7" display with the Raspberry Pi and camera mounted on the rear of the screen. The camera is mounted using a 3D-printed bracket; the STL file is available in the 3D print zip file included with this article.

As ROS can run across a distributed network, I've also installed ROS on an Ubuntu desktop. This desktop PC will be used to develop nodes for the system, run ROS tools to test the code, and simulate the robot's behavior.

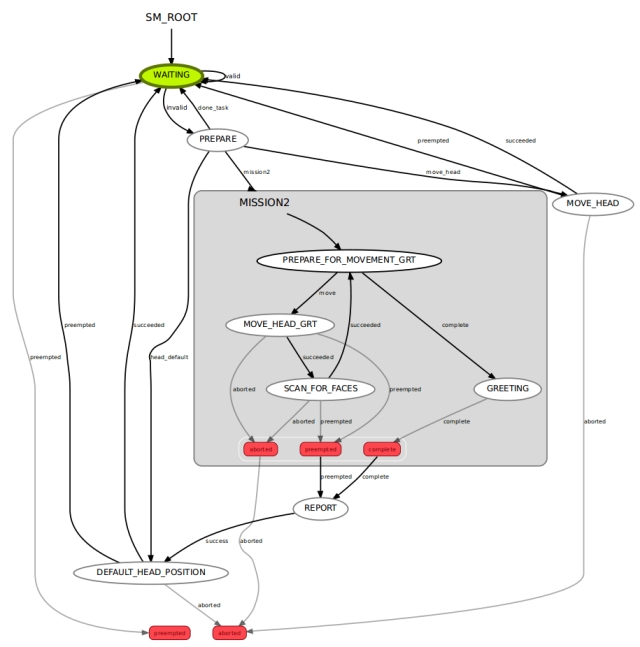

Robotic Missions

To define the requirements for the EaseRobot project, I'll outline some "missions" that I'd like EaseRobot to perform. Inspired by the article "Let's build a robot!", one of the tasks I'd like EaseRobot to accomplish is:

Take a message to... - Since EaseRobot will have the ability to recognize family members, how about making it the 'message taker and reminder'? I could say 'EaseRobot, remind (PersonName) to pick me up from the station at 6pm'. Then, even if that household member had their phone turned off or was listening to loud music, EaseRobot could navigate through the house, find the person, and deliver the message.

This sounds like a great starting point and will be our first mission. I'll modify it slightly, though. What if you could access EaseRobot using a web browser to control and set missions?

Let's break down the "Take a message to..." mission into several smaller design goals that can be worked on and completed individually. The design goals for this mission will be:

Design Goal 1: To be able to look around using the camera, search for faces, attempt to identify any people seen, and display a message for any identified.

Design Goal 2: Facial expressions and speech synthesis. EaseRobot will need to be able to deliver the message.

Design Goal 3: Locomotion controlled by a remote keyboard and/or joystick.

Design Goal 4: Addition of a laser range finder or similar ranging sensor used to aid navigation.

Design Goal 5: Autonomous locomotion.

Design Goal 6: Task assignment and completion notification.

That's quite a list of things to accomplish for what seems like a simple mission for a robot.

Mission 1, Design Goal 1

To accomplish this design goal, we will need to:

- Control the head/camera using RC servos for pan/tilt movement.

- Access images from the Raspberry Pi Camera.

- Detect and recognize faces.

- Control the order of these actions.

For the remainder of this first article, I'll concentrate on the pan/tilt control of the head/camera.

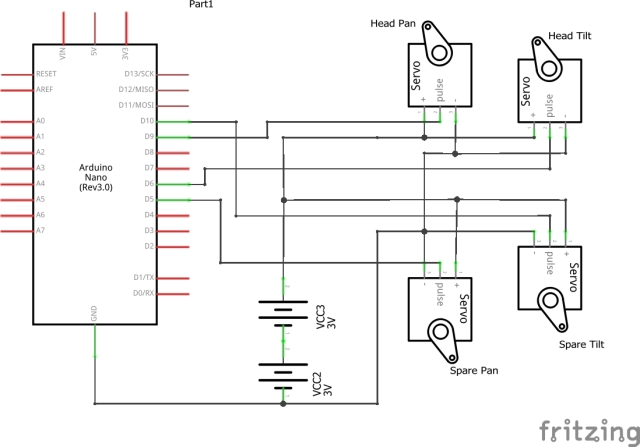

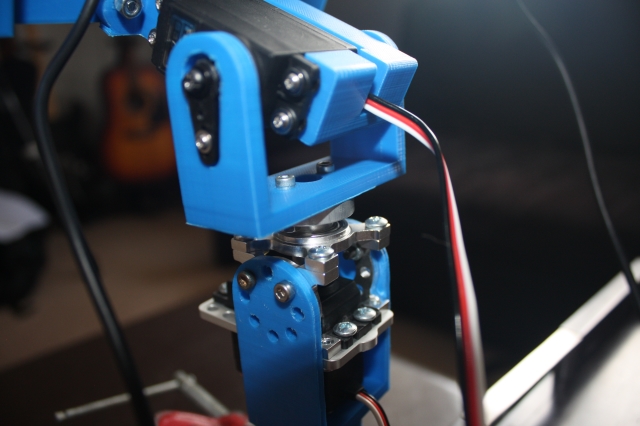

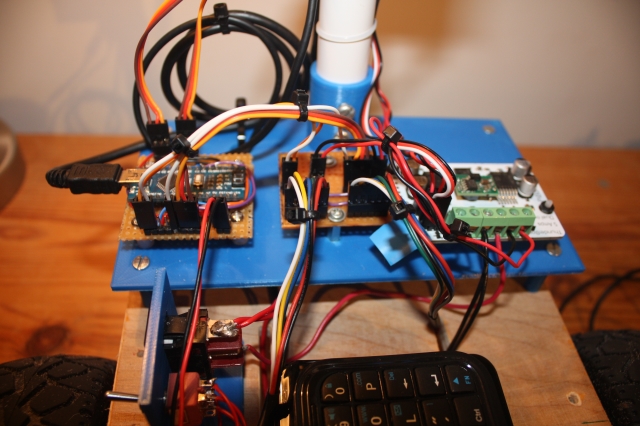

To control the head/camera, we need a pan and tilt device which will require two RC servos. I'm also going to include a second pan/tilt device for future expansion. We therefore require four PWM outputs to control the servos. The Raspberry Pi only has one hardware PWM, and although we could make use of software PWMs, I'm going to avoid that overhead by passing control of the servos off to a second board.

We could use a purpose-built board such as the UltraBorg, available from PiBorg. Using this board, you can connect up to four servos and four HC-SR04 ultrasonic devices to the Raspberry Pi using an I2C bus. However, since I have a number of Arduino Nanos available from a previous project, I'm going to make use of one of those.

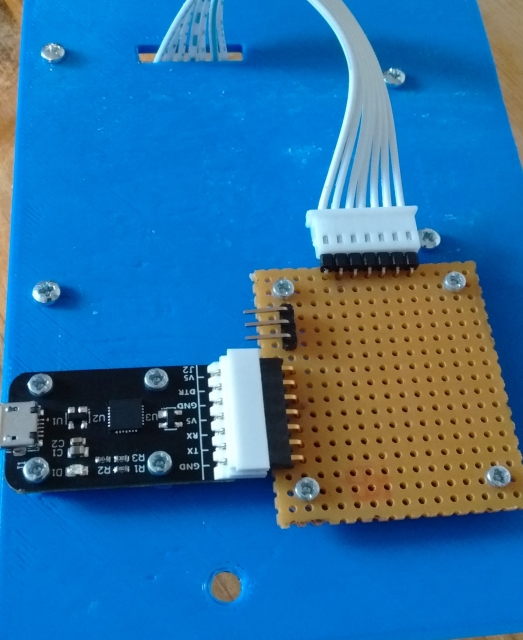

This is also the first of many examples of taking advantage of work already carried out by the ROS community, which lets us concentrate on the robot application. To connect to the ROS-like node running on the Arduino, we'll use a package that includes a node for communicating with the Arduino over the serial port and an Arduino library for use in the Arduino sketch. The package documentation is available on the ROS Wiki under rosserial_arduino.

To utilize this package, we'll need to install it on the ROS target and integrate the library into the Arduino IDE environment. Additionally, we'll need to rebuild the Arduino library if we define custom ROS messages (which we will). The rosserial Arduino tutorials provide a comprehensive guide on how to accomplish this and more.

To control the position of each servo comprising the pan/tilt devices, we'll develop a ROS package with a node that takes pan/tilt demand messages and converts them into individual position messages sent to the Arduino. The first message will specify which joints to move and their required positions. The second message, sent to the Arduino, will contain an index value indicating which of the four servos to move and the angle to which it should be moved. By breaking down this functionality, the Arduino sketch only needs to understand servo control, making it reusable for other servo applications. Note that in Arduino programming, the code running on the Arduino is referred to as a sketch, which I'll continue to use throughout this tutorial.

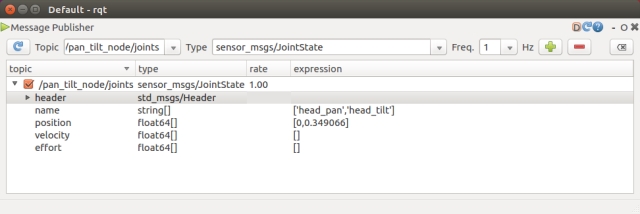

For the initial message, which specifies the joint positions, we'll use the ROS predefined message sensor_msgs/JointState, which is described in the ROS documentation for the sensor_msgs package. As per ROS standards, the position units are radians, so our node will need to convert the position to degrees for the Arduino. The message also includes several fields that we won't be using. Although using this message type might seem excessive, adhering to ROS standards and leveraging existing message types will let us tap into valuable ROS tools later in the project.

The second message, which identifies the servo to move and the angle in degrees, will be a custom message to avoid unnecessary overhead in the Arduino sketch.

We could include the definition of our custom messages in the pan-tilt package, but to promote reuse, we'll create a separate package for the message definitions.

To complete the pan-tilt functionality, we'll develop two ROS packages and a ROS-style Arduino sketch.

We'll call the first package servo_msgs, which will define our custom message. Upon building, it will generate .h files for use by C++ code and automatically create Python scripts. We'll also recompile the Arduino library to produce .h files that will be used by our sketch.

The files comprising this first package are available in the servo_msgs folder. The root of this folder contains a readme file documenting the package, along with two files that are required in every ROS package: CMakeLists.txt and package.xml. You can find information about these files in the tutorial on creating ROS packages.

The msg folder within the package contains the definition file for our message, servo_array.msg:

# index references the servo that the angle is for, e.g. 0, 1, 2 or 3

# angle is the angle to set the servo to

uint8 index

uint16 angle

Think of this as a structure definition, similar to a C struct. The message will be published on a ROS topic to the Arduino and contains two elements: the index, which specifies the servo to be moved, and the angle, which is the position in degrees that the servo should move to.
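To give a feel for how small this message is, here is a minimal sketch that packs the two fields the way ROS 1 serializes fixed-size integers (little-endian, with no padding between fields). The `ServoArray` class and its `pack`/`unpack` helpers are hypothetical stand-ins for illustration; they are not the code that catkin generates from servo_array.msg.

```python
import struct
from dataclasses import dataclass

# "<BH" = little-endian uint8 followed by uint16, with no padding bytes.
LAYOUT = struct.Struct("<BH")


@dataclass
class ServoArray:
    """Hypothetical stand-in for the generated servo_msgs/servo_array type."""
    index: int  # uint8: which servo the angle is for (0-3)
    angle: int  # uint16: angle to set the servo to, in degrees

    def pack(self) -> bytes:
        return LAYOUT.pack(self.index, self.angle)

    @classmethod
    def unpack(cls, payload: bytes) -> "ServoArray":
        return cls(*LAYOUT.unpack(payload))


msg = ServoArray(index=1, angle=120)
payload = msg.pack()
print(len(payload), payload.hex())
```

Under these assumptions the payload is only three bytes, which suits the limited memory and serial bandwidth of the Arduino.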

This concludes our first straightforward ROS package. Our second package is the pan_tilt package, located in the pan_tilt folder, which comprises executable code that will form the pan_tilt_node.

The root folder of this package includes a documentation file, as well as the CMakeLists.txt and package.xml files. This package features several subfolders, which I'll briefly outline. The config folder contains the config.yaml file, which will be used by the launch file (discussed below) to set specific parameters in the parameter server. This enables us to configure the system without recompiling the code.

# In EaseRobot, index0 is for the head and index1 is spare

servo:

  index0:

    pan:

      servo: 0

      joint_name: 'head_pan'

    tilt:

      servo: 1

      flip_rotation: true

      max: 0.349066

      min: -1.39626

      joint_name: 'head_tilt'

  index1:

    pan:

      servo: 2

    tilt:

      servo: 3

In this configuration file, index0 specifies parameters for the head pan and tilt device, while index1 corresponds to the second pan and tilt device. The parameters are defined as follows:

- servo: identifies the servo responsible for the joint

- joint_name: specifies the name of the joint in the joint_state message

- flip_rotation: (explained below)

- max and min: defined in radians, these values restrict the joint's travel range

According to ROS convention, joints follow the right-hand rule, increasing their value in an anticlockwise direction around a positive axis. However, in EaseRobot's construction, the head tilt servo is mounted so that it follows the left-hand rule. By setting flip_rotation to true, our system can adhere to the convention while the pan_tilt_node still passes the correct values to the Arduino for the servo's orientation.
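As a worked example of what flip_rotation does to the configured tilt limits, the sketch below mirrors the node's conversion helper (shown later in the article), in which 0 radians maps to 90 degrees on the servo. The Python function name is hypothetical, and `math.degrees` is used in place of the explicit `rad/(2*pi)*360` arithmetic, which is mathematically equivalent.

```python
import math


def signed_radian_to_servo_degrees(rad: float, flip_rotation: bool) -> float:
    """Map signed radians (0 = centre) to servo degrees (90 = centre)."""
    if flip_rotation:
        rad = -rad
    return math.degrees(rad) + 90.0


# Tilt limits from config.yaml, in radians and following the right-hand rule.
tilt_max_rad = 0.349066
tilt_min_rad = -1.39626

a = signed_radian_to_servo_degrees(tilt_max_rad, True)
b = signed_radian_to_servo_degrees(tilt_min_rad, True)

# Flipping reverses the ordering, so the smaller result becomes the minimum.
servo_min, servo_max = sorted((a, b))
print(round(servo_min, 2), round(servo_max, 2))
```

With the flip applied, the head tilt is limited to roughly 70 to 170 degrees on the servo. The constructor shown later casts the pan limits to int; if the tilt limits are handled the same way, these values would truncate to 69 and 169 rather than round to 70 and 170, because the configured radians are rounded approximations of 20 and 80 degrees.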

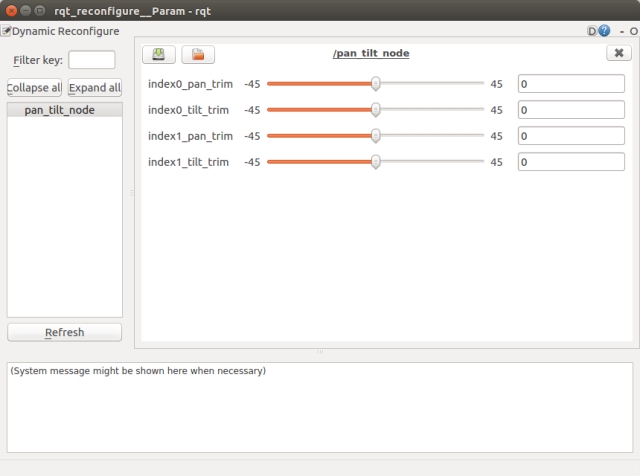

The cfg folder contains the pan_tilt.cfg file, which is used by the dynamic reconfiguration server to adjust servo trim on the fly. As shown below, this file is a Python script.

#!/usr/bin/env python

PACKAGE = "pan_tilt"

from dynamic_reconfigure.parameter_generator_catkin import *

gen = ParameterGenerator()

gen.add("index0_pan_trim", int_t, 0, "Index 0 - Pan Trim", 0, -45, 45)

gen.add("index0_tilt_trim", int_t, 0, "Index 0 - Tilt Trim", 0, -45, 45)

gen.add("index1_pan_trim", int_t, 0, "Index 1 - Pan Trim", 0, -45, 45)

gen.add("index1_tilt_trim", int_t, 0, "Index 1 - Tilt Trim", 0, -45, 45)

exit(gen.generate(PACKAGE, "pan_tilt_node", "PanTilt"))

For a comprehensive understanding of the dynamic reconfiguration server, refer to the ROS Wiki section on dynamic reconfiguration. In our file, we add four parameters, one for each servo, with default values set to zero and minimum/maximum values set to -45 and 45, respectively.

The launch folder contains launch files that enable us to load configuration files and start all the nodes that make up a system. Our folder includes a pan_tilt_test.launch file for testing the pan/tilt part of the EaseRobot system. This is an XML-formatted file.

<?xml version="1.0"?>

<launch>

<rosparam command="load" file="$(find pan_tilt)/config/config.yaml" />

<node pkg="pan_tilt" type="pan_tilt_node" name="pan_tilt_node" output="screen" />

<node pkg="rosserial_python" type="serial_node.py" name="serial_node"

output="screen" args="/dev/ttyUSB0" />

</launch>

For a complete understanding of launch files, refer to the ROS Wiki section on launch files. Our launch file first loads the config file.

<rosparam command="load" file="$(find pan_tilt)/config/config.yaml" />

The next tag executes our pan_tilt_node, directing logging messages to the terminal.

<node pkg="pan_tilt" type="pan_tilt_node" name="pan_tilt_node" output="screen" />

The final tag runs the rosserial node, which communicates with the Arduino, selecting the serial port connected to the Arduino.

<node pkg="rosserial_python" type="serial_node.py" name="serial_node"

output="screen" args="/dev/ttyUSB0" />

The remaining folders, include and src, contain the C++ code for the package. Our package has one C++ class, PanTiltNode, and a main routine within the pan_tilt_node.cpp file.

The main routine initializes our node, creates an instance of our class, passes a callback function to the dynamic reconfiguration server, and hands control to ROS spin, which handles incoming and outgoing topics.

int main(int argc, char **argv)

{

ros::init(argc, argv, "pan_tilt_node");

PanTiltNode *pan_tiltnode = new PanTiltNode();

dynamic_reconfigure::Server<pan_tilt::PanTiltConfig> server;

dynamic_reconfigure::Server<pan_tilt::PanTiltConfig>::CallbackType f;

f = boost::bind(&PanTiltNode::reconfCallback, pan_tiltnode, _1, _2);

server.setCallback(f);

std::string node_name = ros::this_node::getName();

ROS_INFO("%s started", node_name.c_str());

ros::spin();

return 0;

}

The constructor for our class loads parameters from the parameter server, which was set up by our configuration file.

// Constructor

PanTiltNode::PanTiltNode()

{

double max_radians;

double min_radians;

int temp;

/* Get any parameters from server which will not change after startup.

* Defaults used if parameter is not in the parameter server

*/

// Which servo is used for what

n_.param("/servo/index0/pan/servo", pan_servo_[0], 0);

n_.param("/servo/index0/tilt/servo", tilt_servo_[0], 1);

n_.param("/servo/index1/pan/servo", pan_servo_[1], 2);

n_.param("/servo/index1/tilt/servo", tilt_servo_[1], 3);

// Check for any servos mounted the opposite rotation of the right-hand rule

n_.param("/servo/index0/pan/flip_rotation", pan_flip_rotation_[0], false);

n_.param("/servo/index0/tilt/flip_rotation", tilt_flip_rotation_[0], false);

n_.param("/servo/index1/pan/flip_rotation", pan_flip_rotation_[1], false);

n_.param("/servo/index1/tilt/flip_rotation", tilt_flip_rotation_[1], false);

/* Maximum and Minimum ranges. Values stored on parameter server in

* radians and RH rule as per ROS standard. These need converting

* to degrees and may need flipping.

*/

n_.param("/servo/index0/pan/max", max_radians, M_PI/2.0);

n_.param("/servo/index0/pan/min", min_radians, -(M_PI/2.0));

pan_max_[0] = (int)signedRadianToServoDegrees(max_radians, pan_flip_rotation_[0]);

pan_min_[0] = (int)signedRadianToServoDegrees(min_radians, pan_flip_rotation_[0]);

if(true == pan_flip_rotation_[0])

{

temp = pan_max_[0];

pan_max_[0] = pan_min_[0];

pan_min_[0] = temp;

}

//... (rest of the code)

// Joint names

n_.param<std::string>("/servo/index0/pan/joint_name",

pan_joint_names_[0], "reserved_pan0");

n_.param<std::string>("/servo/index0/tilt/joint_name",

tilt_joint_names_[0], "reserved_tilt0");

n_.param<std::string>("/servo/index1/pan/joint_name",

pan_joint_names_[1], "reserved_pan1");

n_.param<std::string>("/servo/index1/tilt/joint_name",

tilt_joint_names_[1], "reserved_tilt1");

first_index0_msg_received_ = false;

first_index1_msg_received_ = false;

// Published topic is latched

servo_array_pub_ = n_.advertise<servo_msgs::servo_array>("/servo", 10, true);

// Subscribe to topic

joint_state_sub_ = n_.subscribe("/pan_tilt_node/joints",

10, &PanTiltNode::panTiltCB, this);

}

The calls to param read the parameter from the server if it is available; otherwise, the default value is used.

n_.param("/servo/index0/pan/servo", pan_servo_[0], 0);
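The lookup-with-default behaviour can be sketched without ROS as follows. The `PARAMS` dictionary is a hypothetical in-memory stand-in for the parameter server, holding only part of config.yaml, and the `param` function is an illustration of the idea rather than the roscpp implementation.

```python
from typing import Any

# Hypothetical stand-in for the parameter server after config.yaml is loaded
# (only a subset of the file is reproduced here).
PARAMS = {
    "servo": {
        "index0": {
            "pan": {"servo": 0, "joint_name": "head_pan"},
            "tilt": {"servo": 1, "flip_rotation": True},
        }
    }
}


def param(tree: dict, name: str, default: Any) -> Any:
    """Return the value stored at a slash-separated name, or the default."""
    node: Any = tree
    for key in name.strip("/").split("/"):
        if not isinstance(node, dict) or key not in node:
            return default
        node = node[key]
    return node


# Present in the tree, so the stored value wins over the default.
print(param(PARAMS, "/servo/index0/tilt/flip_rotation", False))
# Absent, so the defaults are returned.
print(param(PARAMS, "/servo/index0/pan/flip_rotation", False))
print(param(PARAMS, "/servo/index1/pan/servo", 2))
```

This is why config.yaml only needs to list values that differ from the defaults compiled into the node.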

The last two statements of the constructor advertise the topic our node will publish and subscribe to the topic it listens to. The subscribe call is passed the callback function to be called when a message arrives on the topic.

Our callback function is called panTiltCB.

// Callback to move the joints

void PanTiltNode::panTiltCB(const sensor_msgs::JointState& joint)

{

bool index0 = false;

bool index1 = false;

/* Search the list of joint names in the message. Although we expect pan/tilt

* values for one device, a JointState message may contain data for one joint

* or all four joints. The position (rotation) values are signed radians and

* follow the right-hand rule. Values to be converted from signed radians to

* degrees and for the servo orientation. Pan/tilt values are also stored in

* case we change the trim.

*/

for (unsigned int i = 0; i < joint.name.size(); i++)

{

// Is it one of the pan or tilt joints

if(pan_joint_names_[0] == joint.name[i])

{

// Index 0 pan

index0_pan_ = (int)signedRadianToServoDegrees

(joint.position[i], pan_flip_rotation_[0]);

index0 = true;

}

else if(pan_joint_names_[1] == joint.name[i])

{

// Index 1 pan

index1_pan_ = (int)signedRadianToServoDegrees

(joint.position[i], pan_flip_rotation_[1]);

index1 = true;

}

else if(tilt_joint_names_[0] == joint.name[i])

{

// Index 0 tilt

index0_tilt_ = (int)signedRadianToServoDegrees

(joint.position[i], tilt_flip_rotation_[0]);

index0 = true;

}

else if (tilt_joint_names_[1] == joint.name[i])

{

// Index 1 tilt

index1_tilt_ = (int)signedRadianToServoDegrees

(joint.position[i], tilt_flip_rotation_[1]);

index1 = true;

}

}

if(index0 == true)

{

first_index0_msg_received_ = true;

movePanTilt(index0_pan_, index0_tilt_, index0_pan_trim_, index0_tilt_trim_, 0);

}

if(index1 == true)

{

first_index1_msg_received_ = true;

movePanTilt(index1_pan_, index1_tilt_, index1_pan_trim_, index1_tilt_trim_, 1);

}

}

The callback function iterates through the names in the received message, searching for a known joint name. If a name is found, the associated position value is converted from the ROS standard and orientation to a value representing degrees on the servo using the signedRadianToServoDegrees helper function.
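The name-matching step can be illustrated with a short, ROS-free sketch. The `JOINTS` table, the `handle_joint_state` function, and the "left_wheel" joint are hypothetical; the real callback stores the converted values in class members and sets flags rather than returning a dictionary.

```python
import math

# Joint names as configured for the head device, with their flip settings.
JOINTS = {
    "head_pan": ("pan", False),   # (axis, flip_rotation)
    "head_tilt": ("tilt", True),
}


def to_servo_degrees(rad: float, flip: bool) -> int:
    # int() truncates toward zero, like the (int) cast in the C++ code.
    return int(math.degrees(-rad if flip else rad) + 90.0)


def handle_joint_state(names, positions):
    """Return {axis: servo degrees} for every recognised joint name."""
    result = {}
    for name, position in zip(names, positions):
        if name in JOINTS:
            axis, flip = JOINTS[name]
            result[axis] = to_servo_degrees(position, flip)
    return result


# A message may carry joints this node does not own; they are skipped.
demand = handle_joint_state(["head_pan", "head_tilt", "left_wheel"],
                            [0.0, 0.5, 1.0])
print(demand)
```

Matching on names rather than array positions means the node keeps working if other joints are later added to the same JointState message.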

The callback then calls the function movePanTilt. This function adds in the trim offset for the relevant pan and tilt servos, checks if the range should be limited, and then publishes the two messages with the servo index and position. The two published messages are of the same type: one for the relevant pan servo and the other for the relevant tilt servo.

void PanTiltNode::movePanTilt(int pan_value, int tilt_value,

int pan_trim, int tilt_trim, int index)

{

int pan;

int tilt;

servo_msgs::servo_array servo;

pan = pan_trim + pan_value;

tilt = tilt_trim + tilt_value;

pan = checkMaxMin(pan, pan_max_[index], pan_min_[index]);

tilt = checkMaxMin(tilt, tilt_max_[index], tilt_min_[index]);

// Send message for pan position

servo.index = (unsigned int)pan_servo_[index];

servo.angle = (unsigned int)pan;

servo_array_pub_.publish(servo);

// Send message for tilt position

servo.index = (unsigned int)tilt_servo_[index];

servo.angle = (unsigned int)tilt;

servo_array_pub_.publish(servo);

}
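The trim-then-limit sequence can be followed with concrete numbers in the sketch below. It is a simplified illustration: the limits and trim values are assumed for the example rather than taken from the node, and the function returns the (servo index, angle) pairs instead of publishing them.

```python
def clamp(value: int, maximum: int, minimum: int) -> int:
    """Limit value to the range minimum..maximum."""
    return max(minimum, min(maximum, value))


def move_pan_tilt(pan, tilt, pan_trim, tilt_trim, servos, limits):
    """Return the two (servo index, angle) pairs that would be published.

    servos is (pan servo, tilt servo);
    limits is ((pan max, pan min), (tilt max, tilt min)).
    """
    (pan_max, pan_min), (tilt_max, tilt_min) = limits
    pan_angle = clamp(pan + pan_trim, pan_max, pan_min)
    tilt_angle = clamp(tilt + tilt_trim, tilt_max, tilt_min)
    return [(servos[0], pan_angle), (servos[1], tilt_angle)]


# Assumed values: head device on servos 0 and 1, pan limited to 0-180
# degrees and tilt limited to 70-170 degrees.
messages = move_pan_tilt(pan=90, tilt=60, pan_trim=5, tilt_trim=-3,
                         servos=(0, 1), limits=((180, 0), (170, 70)))
print(messages)
```

Here the trimmed pan value of 95 is within range and passes through unchanged, while the trimmed tilt value of 57 falls below the lower limit and is raised to 70.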

There are two helper functions. The first is used to check for the max/min range.

int PanTiltNode::checkMaxMin(int current_value, int max, int min)

{

int value = current_value;

if (value > max)

{

value = max;

}

if (value < min)

{

value = min;

}

return (value);

}

The second helper function is used to convert the ROS standard units and orientation for rotation to those required by the servo.

// Converts a signed radian value to servo degrees. 0 radians is 90 degrees.

double PanTiltNode::signedRadianToServoDegrees(double rad, bool flip_rotation)

{

double retVal;

if(true == flip_rotation)

{

retVal = ((-rad/(2.0*M_PI))*360.0)+90.0;

}

else

{

retVal = ((rad/(2.0*M_PI))*360.0)+90.0;

}

return retVal;

}

The dynamic parameter server callback stores each of the trim parameters and then makes two calls to movePanTilt, one for each pan/tilt device, with the last position value and the latest trim values.

// This callback is for when the dynamic configuration parameters change

void PanTiltNode::reconfCallback(pan_tilt::PanTiltConfig &config, uint32_t level)

{

index0_pan_trim_ = config.index0_pan_trim;

index0_tilt_trim_ = config.index0_tilt_trim;

index1_pan_trim_ = config.index1_pan_trim;

index1_tilt_trim_ = config.index1_tilt_trim;

// We don't want to send a message following a call here unless we have received

// a position message. Otherwise the trim value will be taken for an actual position.

if(first_index0_msg_received_ == true)

{

// Send new messages with new trim values

movePanTilt(index0_pan_, index0_tilt_, index0_pan_trim_, index0_tilt_trim_, 0);

}

if(first_index1_msg_received_ == true)

{

movePanTilt(index1_pan_, index1_tilt_, index1_pan_trim_, index1_tilt_trim_, 1);

}

}
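The reason for the first-message guard is easier to see in a small simulation. The `TrimState` class below is a hypothetical single-servo model that records the angles that would be sent; it is not part of the pan_tilt package.

```python
class TrimState:
    """Tracks the last commanded angle and the trim for one servo."""

    def __init__(self):
        self.position = 0               # last commanded angle in degrees
        self.trim = 0                   # current trim in degrees
        self.position_received = False  # set once a real position arrives
        self.sent = []                  # angles that would have been published

    def on_position(self, degrees: int) -> None:
        self.position = degrees
        self.position_received = True
        self.sent.append(self.position + self.trim)

    def on_reconfigure(self, trim: int) -> None:
        self.trim = trim
        # Without this guard the trim alone would be sent as a position.
        if self.position_received:
            self.sent.append(self.position + self.trim)


state = TrimState()
state.on_reconfigure(5)    # nothing sent: no position received yet
state.on_position(90)      # sends 90 + 5
state.on_reconfigure(-2)   # re-sends the last position with the new trim
print(state.sent)
```

The dynamic reconfiguration server invokes the callback once when it is registered, so without the guard the servos would be driven to the trim value at startup.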

The pan_tilt_node.h file contains the definitions for our PanTiltNode class.

Having completed the pan tilt package, the last coding task is to write the Arduino sketch. The sketch contains many of the elements used in the pan/tilt node. Our sketch is based on the servo tutorial for rosserial, but we need to modify it to access more than one servo and subscribe to our user-defined message.

Each Arduino sketch includes a setup and loop procedure. Our setup procedure initializes the node and subscribes to the servo topic. The remainder of the setup procedure attaches the pins 9, 6, 5, and 10 to the four instances of Servo.

The loop procedure simply calls spinOnce and then delays for 1ms. The call to spinOnce will handle the receipt of the topic.

Attached to the receipt of the servo topic is the callback function servo_cb. This function will be called each time the servo topic message is received, and it then simply adjusts the PWM output for the indexed servo.

/*

Based on the rosserial Servo Control Example

This version controls up to four RC Servos

The node subscribes to the servo topic and acts on a servo_msgs::servo_array message.

This message contains two elements, index and angle. Index references the servos 0-3, and

angle is the angle to set the servo to 0-180.

D5 -> PWM servo indexed 2

D6 -> PWM servo indexed 1

D9 -> PWM servo indexed 0

D10 -> PWM servo indexed 3

*/

#if (ARDUINO >= 100)

#include <Arduino.h>

#else

#include <WProgram.h>

#endif

#include <Servo.h>

#include <ros.h>

#include <servo_msgs/servo_array.h>

/* Define the PWM pins that the servos are connected to */

#define SERVO_0 9

#define SERVO_1 6

#define SERVO_2 5

#define SERVO_3 10

ros::NodeHandle nh;

Servo servo0;

Servo servo1;

Servo servo2;

Servo servo3;

void servo_cb( const servo_msgs::servo_array& cmd_msg)

{

/* Which servo to drive */

switch(cmd_msg.index)

{

case 0:

nh.logdebug("Servo 0 ");

servo0.write(cmd_msg.angle); //set servo 0 angle, should be from 0-180

break;

case 1:

nh.logdebug("Servo 1 ");

servo1.write(cmd_msg.angle); //set servo 1 angle, should be from 0-180

break;

case 2:

nh.logdebug("Servo 2 ");

servo2.write(cmd_msg.angle); //set servo 2 angle, should be from 0-180

break;

case 3:

nh.logdebug("Servo 3 ");

servo3.write(cmd_msg.angle); //set servo 3 angle, should be from 0-180

break;

default:

nh.logdebug("No Servo");

break;

}

}

ros::Subscriber<servo_msgs::servo_array> sub("servo", servo_cb);

void setup()

{

nh.initNode();

nh.subscribe(sub);

servo0.attach(SERVO_0); //attach it to the pin

servo1.attach(SERVO_1);

servo2.attach(SERVO_2);

servo3.attach(SERVO_3);

// Defaults

servo0.write(90);

servo1.write(120);

}

void loop(){

nh.spinOnce();

delay(1);

}

Implementing the Code

Before we can compile the sketch and program the Arduino, we need to build our ROS packages and recompile the ROS Arduino library. This step is crucial to make our user-defined message, servo_array, available in the Arduino IDE.

For this tutorial, I will be using a Linux workstation to run the Arduino IDE. I will build our packages on both the workstation and the Raspberry Pi. Although we are not utilizing any dedicated Raspberry Pi hardware at this stage, you can opt to run the nodes entirely on a workstation. I will run the nodes on the Raspberry Pi and run the test tools on the workstation, but you can choose to run the test tools on the Pi if you prefer. To distinguish between the Pi and the workstation in the instructions below, I have created a directory (workspace) called "ease_robot_ws" on the Pi and "test_ws" on the workstation.

Building the ROS Packages on the Workstation

ROS employs the catkin build system. To begin, we will create a catkin workspace and initialize the workspace. In a command terminal, enter the following commands:

$ mkdir -p ~/test_ws/src

$ cd ~/test_ws/

$ catkin_make

Next, copy the two package folders, pan_tilt and servo_msgs, into the ~/test_ws/src folder and build the code using the following commands:

$ cd ~/test_ws/

$ catkin_make

Verify that the build completes without any errors.

Building the Arduino ROS Library

I have the Arduino IDE installed on the workstation, which created an Arduino folder in my home directory containing a subdirectory "libraries". Note that when regenerating the library, you must delete the ros_lib folder using "rm -rf ros_lib" from within the "libraries" directory.

Use the following commands to build the ros_lib library:

$ source ~/test_ws/devel/setup.bash

$ cd ~/Arduino/libraries

$ rm -rf ros_lib

$ rosrun rosserial_arduino make_libraries.py .

Verify that the build completes without any errors and check that the servo_array.h file was created in the ~/Arduino/libraries/ros_lib/servo_msgs folder.

Building the servo Sketch and Programming the Arduino

Copy the ease_robot_control folder to the ~/Arduino/Projects folder. Start the Arduino IDE and open the ease_robot_control.ino file. From the Tools->Board menu, select the Arduino board you are using. In my case, it's the Nano. From the Tools->Processor menu, select the processor. In my case, it's the ATmega328P (Old Bootloader).

Build the sketch and check for any errors.

To program the Arduino, connect the device to a workstation USB port. In the IDE, from the Tools->Port menu, select the serial port that the Arduino is connected to. In my case, it's /dev/ttyUSB0.

Next, upload the sketch to the Arduino and verify that there are no errors reported.

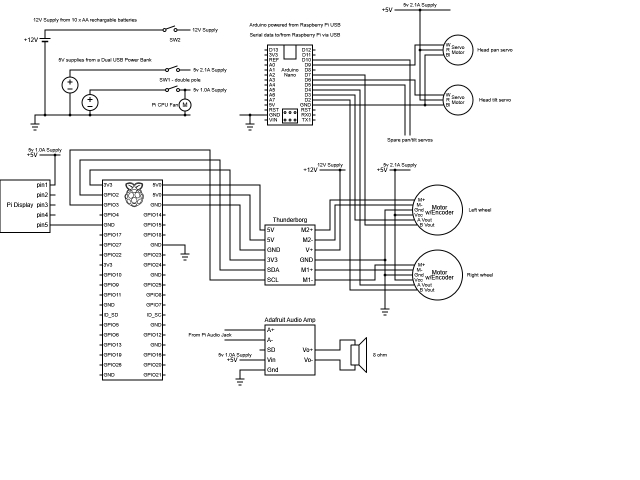

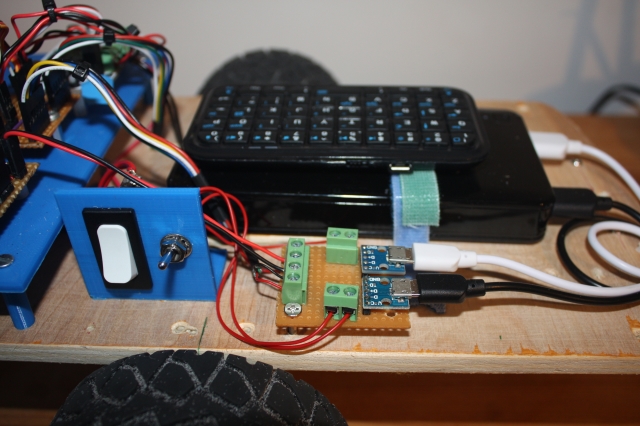

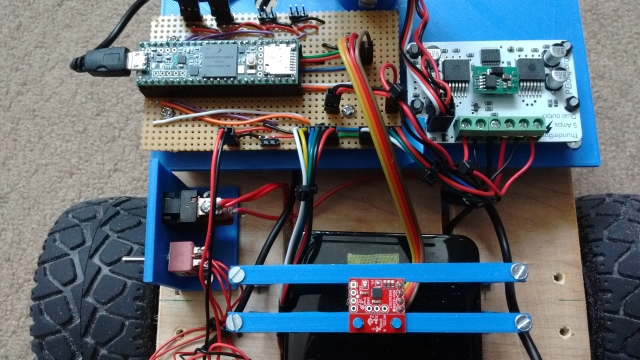

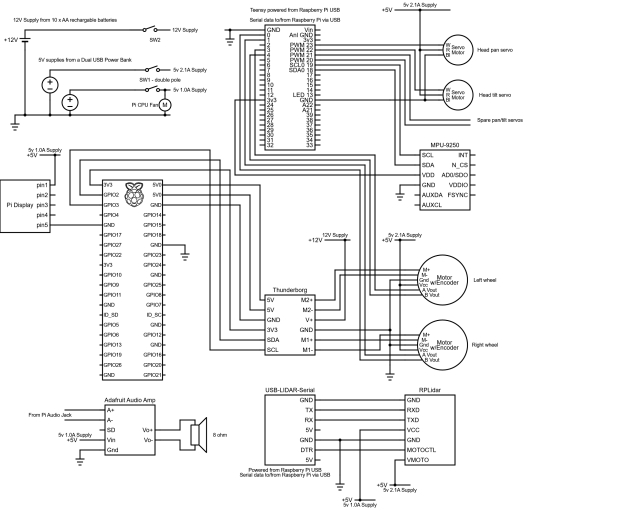

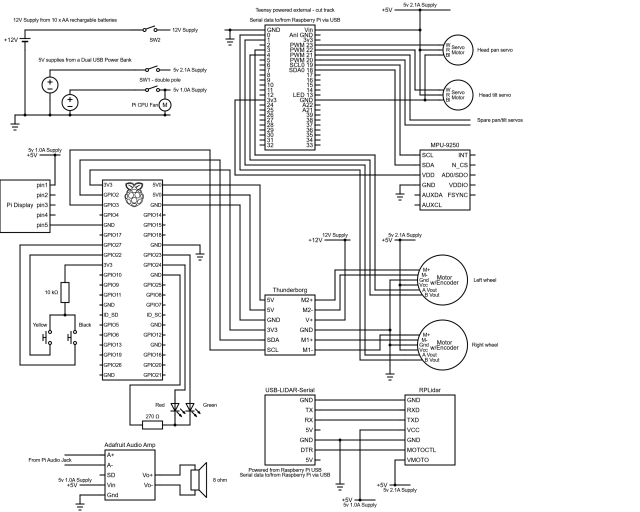

Arduino Circuit

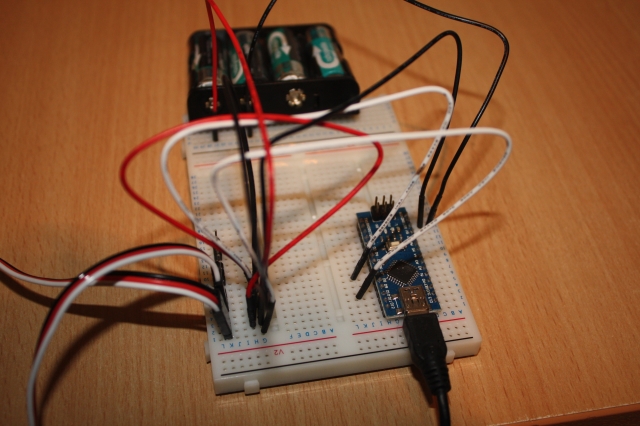

When building EaseRobot, we need to consider power management. For now, I will power the Arduino using the USB port of the Raspberry Pi, while the servos will be powered from 4xAA rechargeable batteries. The test circuit shown here illustrates the servo connections and the power supply to the servos.

To test the software, I will build the circuit on a breadboard and connect only the servos for the head pan and tilt device.

Building the ROS Packages on the Raspberry Pi

Create a catkin workspace and initialize the workspace. In a command terminal, enter the following commands:

$ mkdir -p ~/ease_robot_ws/src

$ cd ~/ease_robot_ws/

$ catkin_make

Copy the two package folders, pan_tilt and servo_msgs, into the ~/ease_robot_ws/src folder and then build the code using the following commands:

$ cd ~/ease_robot_ws/

$ catkin_make

Verify that the build completes without any errors.

Tip

When running ROS code and tools on both a workstation and the Raspberry Pi, you end up typing the same commands repeatedly in multiple terminals. I have included the full commands in the instructions below, but here are a few tips to save you some typing:

On the Raspberry Pi, to avoid typing "source devel/setup.bash" in every terminal, I have added it to the .bashrc file.

$ cd ~/

$ nano .bashrc

Then add "source /home/ubuntu/ease_robot_ws/devel/setup.bash" to the end of the file, save, and exit.

When running test code and tools on the workstation, it needs to know where the ROS master is located. I have added the following to the .bashrc file for the workstation:

alias ease_robot='source ~/test_ws/devel/setup.bash; export ROS_MASTER_URI=http://ubiquityrobot:11311'

By simply typing "ease_robot" at a terminal, the two commands are executed, saving you from repetitive typing.

Running the Code

Now that we have set up our code, we are ready to run it. With the Arduino connected to a USB port of the Raspberry Pi, use the launch file to start the nodes with the following commands. If no master node is running in the system, the launch command will also launch the master node, roscore.

$ cd ~/ease_robot_ws/

$ source devel/setup.bash

$ roslaunch pan_tilt pan_tilt_test.launch

In the terminal, you should see:

- A list of parameters now in the parameter server

- A list of the nodes, which should show pan_tilt_node and serial_node

- The address of the master

- The starting of the two nodes

- Log information from our code

We can now use some of the ROS tools to examine, interact, and test the system.

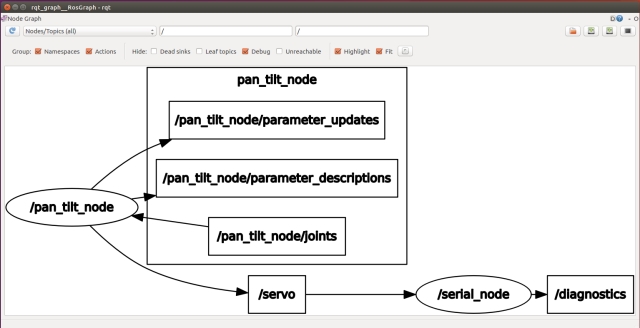

To check that the expected nodes are running and connected by the expected topics, open a command terminal on the workstation and type the following commands:

$ cd ~/test_ws

$ source devel/setup.bash

If you launched the nodes on one device, for example, the Raspberry Pi, and want to run the tools on a second device, you need to tell the second device where to find the master. In the same terminal, type:

$ export ROS_MASTER_URI=http://ubiquityrobot:11311

Now, in the same terminal, start the graph tool:

$ rqt_graph

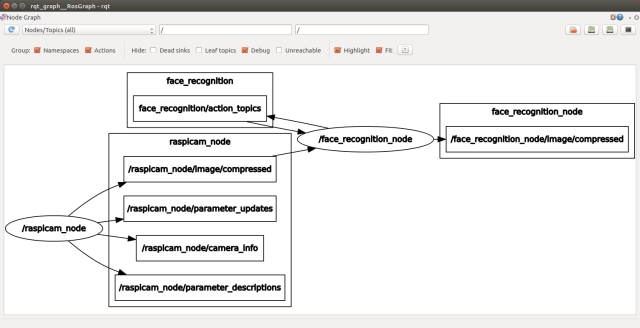

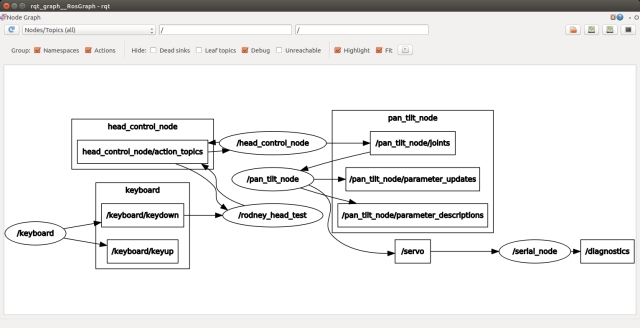

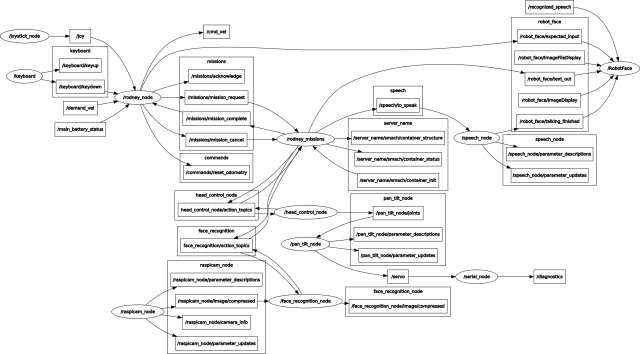

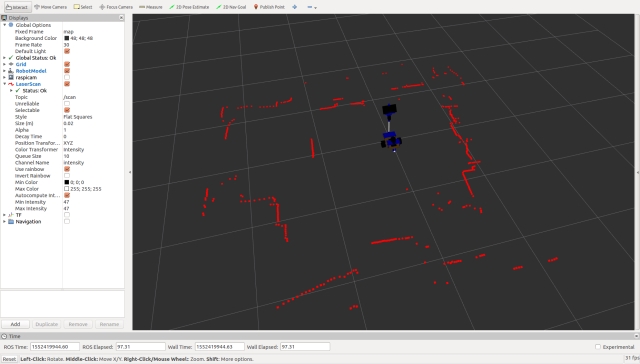

From the graph, you can see that the two nodes are running and are connected by the /servo topic. You can also see the topic /pan_tilt_node/joints.

We will now open a second terminal on the workstation and send a message to move the pan/tilt device using rostopic. In the new terminal, enter the following commands. Don't forget to give the location of the master if you are running the tools on a different device from the one you launched the nodes on.

$ cd ~/test_ws

$ source devel/setup.bash

$ export ROS_MASTER_URI=http://ubiquityrobot:11311

$ rostopic pub -1 /pan_tilt_node/joints sensor_msgs/JointState '{header: {seq: 0, stamp: {secs: 0, nsecs: 0}, frame_id: ""}, name: [ "head_pan","tilt_pan"], position: [0,0.349066], velocity: [], effort: []}'

The last command makes rostopic publish a single message on the /pan_tilt_node/joints topic, of message type sensor_msgs/JointState, with a pan position of 0 radians and a tilt position of 0.349066 radians. If everything is working, the servos will move to the given position. Note that at this stage of the project, the servos move straight to the new position. In the next article, we will add a node that will move the head in a more controlled manner.
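Since the joint positions are given in radians, it can help to work out the values from degrees; the tilt value 0.349066 used above is 20 degrees. The following is a minimal sketch of that conversion. The helper name joint_positions is hypothetical and is not part of the EaseRobot packages.

```python
import math

def joint_positions(pan_deg, tilt_deg):
    """Convert pan/tilt angles in degrees to radians, rounded to 6 places."""
    return [round(math.radians(pan_deg), 6), round(math.radians(tilt_deg), 6)]

# Position values for a pan of 0 degrees and a tilt of 20 degrees
print(joint_positions(0, 20))
```

The two values returned can be pasted into the position field of the rostopic command.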

Typing the rostopic command can be a bit long-winded. Alternatively, you can use the rqt GUI. In the terminal, type:

$ rosrun rqt_gui rqt_gui

This will launch a window where you can select the Message Publisher, choose the message to publish, and set the contents of the message fields.

Due to the mechanical fittings of the pan/tilt device, it may be off-center by a number of degrees. You can trim the servos with the following procedure:

Set the position of both servos to the mid positions.

$ rostopic pub -1 /pan_tilt_node/joints sensor_msgs/JointState '{header: {seq: 0, stamp: {secs: 0, nsecs: 0}, frame_id: ""}, name: [ "head_pan","tilt_pan"], position: [0,0], velocity: [], effort: []}'

In a new terminal, start rqt_reconfigure with the following commands. Don't forget to give the location of the master if you are running on a different device.

$ cd ~/test_ws

$ source devel/setup.bash

$ export ROS_MASTER_URI=http://ubiquityrobot:11311

$ rosrun rqt_reconfigure rqt_reconfigure

This will bring up a user interface like the one shown below. Trim parameters can be dynamically adjusted via the interface.

Once you are happy with the trim values, you can edit the pan_tilt.cfg to include the new trim values as the defaults. Then, the next time the nodes are started, these trim values will be used.

To terminate the nodes, simply hit Ctrl-c in the terminal.

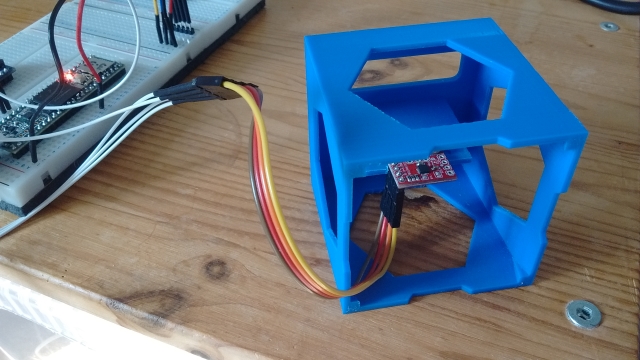

Pan-Tilt Mechanism

The EaseRobot's pan-tilt mechanism uses two Futaba servos, the S3003 and the S3305. The S3305, which has metal gears, is used in the pan position for smooth and precise movement. Instead of purchasing a pre-made pan-tilt device, I designed and 3D printed my own, and the STL files are available for download. To reduce the risk of the combined weight of the display and Raspberry Pi exerting excessive torque on the pan servo shaft, I incorporated a load-bearing servo block into the design, which increases the mechanical load the servo can carry. This added to the overall cost of the robot; an alternative would be to mount the camera on a smaller pan-tilt device and fix the screen in place.

Key Takeaways

In this installment, we successfully integrated a ROS node from the broader ROS community into our EaseRobot system and developed our own custom ROS node. We have ROS running on the Raspberry Pi master board and have also leveraged the Arduino Nano to offload certain functionalities.

In the next installment, we will continue to work towards Design Goal 1 by integrating a Python-based face recognition library wrapped in a ROS node and developing a node to control the head movement.

Enhancing Our House Bot with Face Recognition and Head Control

In this installment of our ROS (Robot Operating System) House Bot series, we'll build upon the foundation established in Part 1 by incorporating face recognition and head control capabilities to achieve our Design Goal 1.

Introduction

The EaseRobot project is a hobbyist robotic endeavor aimed at designing and building an autonomous house-bot. This article is the second in the series, detailing the project's progress.

Background

In Part 1, we defined the requirements for our robot and broke down our mission into manageable Design Goals. Our mission, inspired by the article "Let's build a robot!", involves creating a robot that can take messages to family members. This capability will enable the robot to recognize individuals, navigate to their location, and deliver personalized messages.

The Design Goals for this mission are:

- To use the camera to search for faces, identify people, and display a message for recognized individuals

- To enable facial expressions and speech synthesis for message delivery

- To control locomotion using a remote keyboard and/or joystick

- To integrate a laser range finder or similar ranging sensor for navigation

- To achieve autonomous locomotion

- To assign and complete tasks with notification

In Part 1, we utilized ROS to add pan/tilt functionality to the head and camera. Here, we'll focus on adding face recognition and control nodes to complete Design Goal 1.

Mission 1, Design Goal 1 Continued

Accessing Images from the Raspberry Pi Camera

We'll leverage the ROS community's existing work to simplify our development process. The Raspberry Pi Ubuntu image includes a ROS package called raspicam_node, which we'll use to access the camera. If you're using a different OS image, you can install the node from the GitHub site.

To add the node to our system, we'll include a supplied ROS launch file in our launch file. We'll use an image resolution of 1280 x 960 pixels, so we'll add the following to our launch file:

<include file="$(find raspicam_node)/launch/camerav2_1280x960.launch" />

ROS uses its own image format to pass images between nodes. We'll need to convert ROS images to OpenCV images and back again using the cv_bridge package.

We'll write the face recognition node in Python. As other nodes in this project are written in C++, this gives us examples in both languages. We'll also use a Python face recognition library.

Detecting and Recognizing Faces

Before the system can recognize faces, we need to train it with the subjects we wish to recognize. We'll create two non-ROS Python scripts: data_set_generator.py and training.py. The first script will capture facial images of each subject using the camera, while the second script will use these images to train the system. The output of the second script is a yaml file containing the training data, which the ROS node will load during initialization.

Our ROS package for the node is called face_recognition and is available in the face_recognition folder. The subfolder scripts contains our two training scripts.

Each script utilizes face detection and face recognition built into OpenCV. If you're interested in understanding how this works, I recommend reading articles on the internet. I'll provide a high-level description of each script, starting with data_set_generator.py.

After the required imports, we load the classifier using the OpenCV library, declare a helper function to ensure that required folders exist, and create folders to hold captured images and training data.

The main part of the script then sets up the camera, creates a window to display the image, and prompts the user for input.

First, we set the camera resolution and initialize some variables, including the file name that stores our list of subjects. We then open the file and create a window to display the image read from the camera, allowing the subject to position themselves within the camera's field of view.

Next, the script prompts the user to enter the subject's unique ID, name, and whether it is a low-light condition. The unique IDs should start at 1 and increment by 1 for each new subject. It is recommended to run this script twice for each subject, once in bright light and once in low light conditions, to improve the recognition algorithm's success rate. Each run of the script will capture 100 images of the subject, with file names constructed from the subject ID and image number.

The script then adds the subject to the names file if they don't already exist.

    with picamera.PiCamera() as camera:
        camera.resolution = (1280, 960)

        looping = True
        count = 0
        end = 99
        names_dict = {}
        name_file = '../trainer/names.yml'

        # Open the file of IDs and names to append the new one to
        if os.path.exists(name_file):
            with open(name_file, 'r') as stream:
                names_dict = yaml.safe_load(stream)

        cv2.namedWindow('frame', cv2.WINDOW_NORMAL)

        face_id = input("What is this person's ID number? ")
        name = input("What is this person's name? ")
        low_light = input("Low light Y/N? ")

        if low_light == 'Y' or low_light == 'y':
            count = 100
            end = 199

        # If not already in the dictionary add details
        # (keys are stored as integers, so test with the integer ID)
        if int(face_id) not in names_dict:
            names_dict[int(face_id)] = name

        with open(name_file, 'w') as outfile:
            yaml.dump(names_dict, outfile, default_flow_style=False)
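One detail worth noting is that the dictionary keys are stored as integers (names_dict[int(face_id)]), while a value typed at a Python 3 prompt arrives as text, so the membership test needs to use the integer form. The sketch below illustrates the idea with a hypothetical helper, add_subject, and a plain dictionary standing in for the YAML file; the names are placeholders.

```python
def add_subject(names_dict, face_id_text, name):
    """Add a subject keyed by integer ID unless the ID is already present.

    Returns True if the subject was added, False if the ID already existed.
    """
    face_id = int(face_id_text)  # text from the prompt -> integer key
    if face_id in names_dict:
        return False
    names_dict[face_id] = name
    return True

names = {1: "Alice"}
print(add_subject(names, "2", "Bob"))    # new ID, so it is added
print(add_subject(names, "1", "Carol"))  # ID 1 is already in use
print(names)
```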

The script then enters a loop to capture the images. Each pass of the loop captures an image from the camera, converts it to a numpy array, and attempts to detect a face in the image using OpenCV. If a face is detected, the image is cropped around the face, the number of image samples is incremented, and the cropped grey scale image is stored in the dataset folder. The original image from the camera, along with a superimposed frame around the face, is displayed to the user.

        while(looping):
            # Create a memory stream so image doesn't need to be saved to a file
            stream = io.BytesIO()

            camera.capture(stream, format='jpeg')

            # Convert picture to numpy array
            buff = numpy.frombuffer(stream.getvalue(), dtype=numpy.uint8)

            # Now create an OpenCV image
            image_frame = cv2.imdecode(buff, 1)

            # Convert frame to grayscale
            gray = cv2.cvtColor(image_frame, cv2.COLOR_BGR2GRAY)

            # Detect faces of different sizes, list of faces rectangles
            faces = face_detector.detectMultiScale(gray, 1.3, 5)

            # Although faces could contain more than one face we only expect one
            # person to be in the data set image otherwise it would confuse
            # the whole thing
            if (len(faces) != 0):
                # Expecting one face only on the data set image
                (x, y, w, h) = faces[0]

                # Draw a rectangle around the face on the colour image
                cv2.rectangle(image_frame, (x,y), (x+w,y+h), (255,0,0), 4)

                # Increment sample face image
                count += 1

                # Save the cropped grayscale face into the dataset folder
                cv2.imwrite("dataset/User." + str(face_id) + '.' + str(count) + ".jpg", gray[y:y+h,x:x+w])

                # Display the video frame, with bounded rectangle on the person's face
                cv2.imshow('frame', image_frame)

            # To stop taking video, press 'q' for at least 100ms
            if cv2.waitKey(100) & 0xFF == ord('q'):
                looping = False

            # If the number of images taken reaches 100, stop taking video
            elif count>end:
                looping = False
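The file naming scheme is what ties the two scripts together: the generator writes files named User.<id>.<count>.jpg, and the training script recovers the ID from the second dot-separated field. A minimal sketch of that round trip follows. The helper names image_file_name, id_from_path and count_range are hypothetical and exist only for this illustration.

```python
import os

def image_file_name(face_id, count):
    """Build the data set file name for one captured face image."""
    return "dataset/User." + str(face_id) + "." + str(count) + ".jpg"

def id_from_path(image_path):
    """Recover the subject ID from a data set file name."""
    return int(os.path.split(image_path)[-1].split(".")[1])

def count_range(low_light):
    """Image numbers written by one run: 1-100 normally, 101-200 in low light."""
    start = 100 if low_light else 0
    return range(start + 1, start + 101)

path = image_file_name(3, 101)
print(path, id_from_path(path))
print(list(count_range(False))[:3], list(count_range(True))[-1])
```

Because the low-light run uses a separate range of image numbers, it does not overwrite the images captured in bright light for the same subject.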

Finally, the script closes the window displaying the image and prints a message indicating that the process is complete.

        # Close all started windows
        cv2.destroyAllWindows()

        print("Data prepared")

Once you have run the script for each subject, you can then run the training.py script to train the face recognition model.

The training.py script starts by importing the necessary libraries and defining the assure_path_exists function. It then creates an LBPH face recognizer with cv2.face.LBPHFaceRecognizer_create and a CascadeClassifier face detector, which uses the same classifier file as the data set generator.

    import cv2
    import os
    import numpy as np

    def assure_path_exists(path):
        dir = os.path.dirname(path)
        if not os.path.exists(dir):
            os.makedirs(dir)

    # Create Local Binary Patterns Histograms for face recognition
    recognizer = cv2.face.LBPHFaceRecognizer_create()

    # Using prebuilt frontal face training model, for face detection
    detector = cv2.CascadeClassifier("../classifiers/haarcascade_frontalface_default.xml")

The get_images_and_labels function reads in each stored image, detects the face, and obtains the ID from the file name.

    # Create method to get the images and label data
    def get_images_and_labels(path):
        # Get all file paths
        image_paths = [os.path.join(path,f) for f in os.listdir(path)]

        # Initialize empty face samples
        face_samples=[]

        # Initialize empty IDs
        ids = []

        # Loop all the file paths
        for image_path in image_paths:
            # The stored image is grayscale so read in grayscale
            gray = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)

            # Get the image ID
            id = int(os.path.split(image_path)[-1].split(".")[1])

            # Get the face from the training images
            # Don't need any scaling as these images already full face
            faces = detector.detectMultiScale(gray)

            # During testing not always detected face on image, which
            # is odd as it should be just an image that was saved
            if (len(faces) == 0):
                print("No face on " + image_path)
            else:
                # We know each image is only of one face
                (x, y, w, h) = faces[0]

                # Add the image to face samples
                face_samples.append(gray[y:y+h,x:x+w])

                # Add the ID to IDs
                ids.append(id)

        # Pass the face array and IDs array
        return face_samples,ids

Once all the faces and IDs are obtained, they are passed to the OpenCV face recognizer for training, and the trained data from the recognizer is saved to disk. The face recognition library used by our node will later load this data into its own recognizer.

    # Get the faces and IDs
    faces,ids = get_images_and_labels('dataset')

    # Train the model using the faces and IDs
    recognizer.train(faces, np.array(ids))

    # Save the model into trainer.yml
    assure_path_exists('../trainer/')
    recognizer.save('../trainer/trainer.yml')

    print("Done")

The code for the ROS node itself is in the subfolder src in the file face_recognition_node.py. The code makes use of a library file, face_recognition_lib.py, which contains the class FaceRecognition. This file is in the subfolder src/face_recognition_lib.

Before describing the code for the node, we'll discuss the FaceRecognition class. After the required imports and the declaration of the class, it defines a number of functions.

The class constructor creates the OpenCV face recognizer and then reads the training file created by the training script. It then opens the file containing the list of names and the IDs, and creates the classifier. It finally stores a confidence value passed to it. This value will be used to determine if the suggested ID for the face is accepted.

    def __init__(self, path, confidence):
        # Create Local Binary Patterns Histograms for face recognition
        self.__face_recognizer = cv2.face.LBPHFaceRecognizer_create()

        # Load the trained model
        self.__face_recognizer.read(path + '/trainer/trainer.yml')

        # Load the names file
        with open(path + '/trainer/names.yml', 'r') as stream:
            self.__names_dict = yaml.safe_load(stream)

        # Detect object in image using Haarcascade Frontal Face
        self.__face_detector = cv2.CascadeClassifier(
            path + '/classifiers/haarcascade_frontalface_default.xml')

        # Confidence level,
        # the confidence of the system in recognising a face must be greater than
        # this level to be accepted by the system as a recognised face.
        self.__confidence_level = confidence

Two functions are declared which will be used to modify the captured image if a face is detected. The first will draw a rectangle on the image, and the second will draw the supplied text on the image.

    # Function to draw rectangle on image according to given (x, y) coordinates
    # and the given width and height
    def draw_rectangle(self, img, rect, bgr):
        (x, y, w, h) = rect
        cv2.rectangle(img, (x, y), (x+w, y+h), bgr, 4)

    # Function to draw text on given image starting at the passed (x, y) coordinates.
    def draw_text(self, img, text, x, y, bgr):
        cv2.putText(img, text, (x, y), cv2.FONT_HERSHEY_PLAIN, 3.0, bgr, 4)

The detect_faces function is responsible for detecting faces in a supplied image. It converts the image to grayscale, allowing OpenCV to detect faces. If faces are detected, the function returns the face data and their locations in the image. This function is designed to handle multiple faces in a single image.

    def detect_faces(self, img):
        face_data = []

        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

        faces_detected = self.__face_detector.detectMultiScale(gray, 1.3, 5)
        if len(faces_detected) == 0:
            return None, None

        for face in faces_detected:
            (x, y, w, h) = face
            # Rows are indexed by y/height and columns by x/width
            face_data.append(gray[y:y+h, x:x+w])

        return face_data, faces_detected
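Because an OpenCV image is indexed by row first and column second, a face rectangle (x, y, w, h) is cropped using rows y to y+h and columns x to x+w. The sketch below shows the same indexing on a small nested list standing in for a grayscale image. The crop helper is hypothetical and is used only for illustration.

```python
def crop(image, rect):
    """Return the sub-image for rect = (x, y, w, h); rows use y, columns use x."""
    x, y, w, h = rect
    return [row[x:x + w] for row in image[y:y + h]]

# A 4-row by 5-column "image" whose pixel value encodes (row, column)
image = [[10 * r + c for c in range(5)] for r in range(4)]

# A rectangle 3 wide and 2 high whose top-left corner is at x=1, y=2
print(crop(image, (1, 2, 3, 2)))  # [[21, 22, 23], [31, 32, 33]]
```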

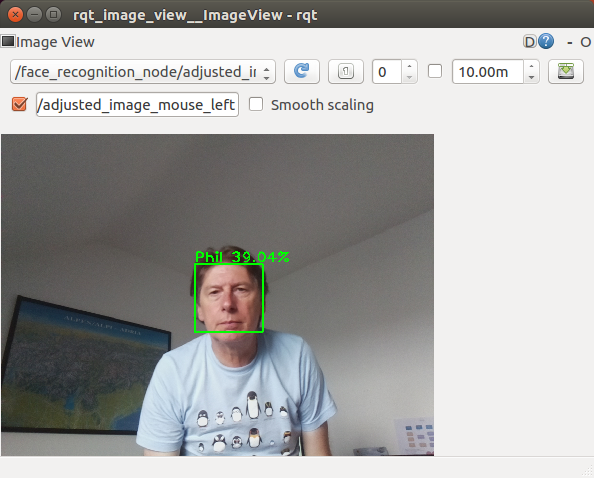

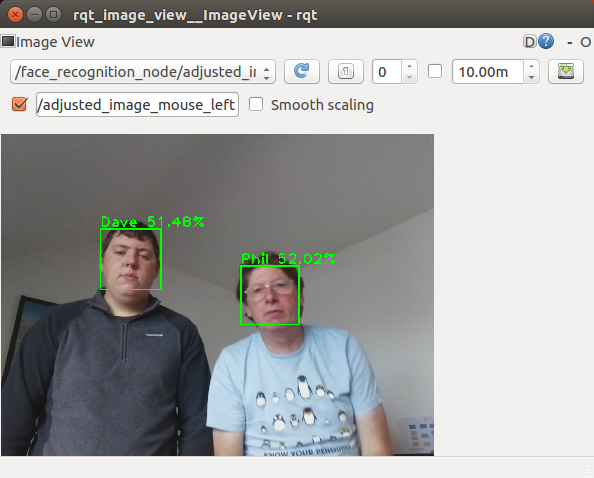

The scan_for_faces function is the primary entry point for face detection and recognition. It calls the detect_faces function and, if faces are detected, loops through each face, using OpenCV's face predictor to recognize the individual. The confidence level of the prediction is converted to a percentage and, if it exceeds a predefined threshold, the face is highlighted in green; otherwise, it is highlighted in red. The function returns a dictionary containing the IDs and names of recognized individuals.

    def scan_for_faces(self, img):
        faces, rects = self.detect_faces(img)

        detected_dict = {}

        if faces is not None:
            for index in range(len(faces)):
                label, confidence = self.__face_recognizer.predict(faces[index])

                our_confidence = round(100 - confidence, 2)

                name_text = self.__names_dict[label]
                name_text_confidence = name_text + " {0:.2f}%".format(our_confidence)

                if our_confidence > self.__confidence_level:
                    colour = (0, 255, 0)
                else:
                    colour = (0, 0, 255)

                self.draw_rectangle(img, rects[index], colour)
                self.draw_text(img, name_text_confidence, rects[index,0], rects[index,1]-5, colour)

                if our_confidence > self.__confidence_level:
                    detected_dict[label] = name_text

        return detected_dict
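The value returned by the LBPH recognizer's predict call behaves like a distance, where a lower number means a closer match, which is why the code subtracts it from 100 before comparing it with the threshold. A stand-alone sketch of that decision is shown below. The function name classify is hypothetical, and the default threshold of 20 is the node's default confidence level.

```python
GREEN = (0, 255, 0)  # BGR colour for an accepted face
RED = (0, 0, 255)    # BGR colour for a rejected face

def classify(distance, confidence_level=20):
    """Map a recognizer distance to (percentage, accepted, box colour)."""
    our_confidence = round(100 - distance, 2)
    accepted = our_confidence > confidence_level
    return our_confidence, accepted, GREEN if accepted else RED

print(classify(45.5))  # (54.5, True, (0, 255, 0))
print(classify(92.0))  # (8.0, False, (0, 0, 255))
```

Note that a distance above 100 produces a negative percentage, which is simply treated as a rejected face.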

The main routine of the ROS node registers the node with ROS, creates an instance of the FaceRecognitionNode class, and then hands control to ROS with rospy.spin(). The class constructor creates an instance of CvBridge to convert ROS images to OpenCV images. It publishes the topic face_recognition_node/image/compressed and subscribes to the topic raspicam_node/image/compressed. The node reads the confidence threshold from the parameter server, using a default of 20% if the parameter is not set.

    def main(args):
        rospy.init_node('face_recognition_node', anonymous=False)
        frn = FaceRecognitionNode()
        rospy.loginfo("Face recognition node started")
        try:
            rospy.spin()
        except KeyboardInterrupt:
            print("Shutting down")

    if __name__ == '__main__':
        main(sys.argv)

The FaceRecognitionNode class ties the camera images to the face recognition library. Its constructor sets up the CvBridge, the publisher and subscriber, the FaceRecognition library instance, and the action server.

    class FaceRecognitionNode:

        def __init__(self):
            self.__bridge = CvBridge()
            self.__image_pub = rospy.Publisher('face_recognition_node/image/compressed',
                                               CompressedImage, queue_size=1)
            self.__image_sub = rospy.Subscriber('raspicam_node/image/compressed',
                                                CompressedImage, self.callback)

            confidence_level = rospy.get_param('/face_rec_python/confidence_level', 20)
            rospy.loginfo("FaceRecognitionNode: Confidence level %s", str(confidence_level))

            # Create the face_recognition_lib class instance
            # (the path and confidence level are two separate arguments)
            self.__frc = face_recognition_lib.FaceRecognition(
                roslib.packages.get_pkg_dir('face_recognition', required=True),
                confidence_level)

            # Create the Action server
            self.__as = actionlib.SimpleActionServer(
                'face_recognition', scan_for_facesAction, self.do_action, False)
            self.__as.start()

The do_action function is called by the action server when a request to conduct the action is received. It converts the last received image from a ROS image to an OpenCV image, scans the image for known faces using the scan_for_faces function, and publishes the adjusted image on the face_recognition_node/image/compressed topic.

        def do_action(self, goal):
            # Scan the current image for faces recognised
            image = self.__bridge.compressed_imgmsg_to_cv2(self.__current_image)
            detected_dict = self.__frc.scan_for_faces(image)

            try:
                self.__image_pub.publish(self.__bridge.cv2_to_compressed_imgmsg(image))
            except CvBridgeError as e:
                print(e)

            # Now post a message with the list of IDs and names
            ids = []
            names = []
            for k, v in detected_dict.items():
                ids.append(k)
                names.append(v)

            # Set result for the action
            result = scan_for_facesResult()
            result.ids_detected = ids
            result.names_detected = names
            self.__as.set_succeeded(result)

The callback function is called each time a message is received on the raspicam_node/image/compressed topic. It simply stores the current image so that it is ready for facial recognition when requested.

        def callback(self, data):
            # Each time we receive an image we store it ready in case we are then asked to scan it
            self.__current_image = data
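The node follows a "keep the latest value" pattern: the subscriber callback only stores the most recent image, and the real work happens when an action request arrives. One thing to watch for with this pattern is a request arriving before any image has been received. The sketch below shows the pattern without ROS, using a hypothetical LatestImageCache class and a string as a stand-in for the image message. The guard for a missing image is an addition for illustration and is not part of the node code shown.

```python
class LatestImageCache:
    """Store the newest item and hand it out on request."""

    def __init__(self):
        self._current = None

    def callback(self, data):
        # Called for every incoming item; older items are simply overwritten
        self._current = data

    def latest(self):
        # Refuse to hand out an image before the first one has arrived
        if self._current is None:
            raise RuntimeError("no image received yet")
        return self._current

cache = LatestImageCache()
cache.callback("frame 1")
cache.callback("frame 2")
print(cache.latest())  # frame 2
```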

The node package includes a config.yaml file for setting the confidence level without changing the code. Additionally, a test.launch file is provided for testing the node, which launches the camera node and the face recognition node.

Face Recognition Action

The face recognition package utilizes a user-defined action message to initiate the operation and return the results of identifying known faces. The face_recognition_msgs package contains the scan_for_faces.action file, which is located in the action subfolder.

The action specification consists of three main sections: goal, result, and feedback. It resembles a message definition file, with each section separated by three dashes (---).

    ---
    uint16[] ids_detected
    string[] names_detected
    ---

The goal section, above the first three dashes, does not require any parameters. The receipt of the goal will trigger the action.

The result section, below the first three dashes, contains an array of IDs and an array of names for any recognized faces.

The feedback section, below the second three dashes, does not provide any feedback in this case.
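To make the three-part layout concrete, the sketch below splits the text of an action definition on the --- separator lines. The split_action function is a hypothetical helper written for this illustration (ROS generates the real message classes from the file at build time), and the text is the scan_for_faces definition as described above, with empty goal and feedback sections.

```python
def split_action(text):
    """Split an action definition into goal, result and feedback field lists."""
    sections = [[]]
    for line in text.splitlines():
        line = line.strip()
        if line == "---":
            sections.append([])  # a separator starts the next section
        elif line:
            sections[-1].append(line)
    if len(sections) != 3:
        raise ValueError("expected exactly two '---' separators")
    goal, result, feedback = sections
    return {"goal": goal, "result": result, "feedback": feedback}

scan_for_faces = """
---
uint16[] ids_detected
string[] names_detected
---
"""
print(split_action(scan_for_faces))
```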

Controlling the Head

We have now developed a node to perform facial recognition on an image from the camera and, from part 1 of this article, we have the pan/tilt functionality to move the servos connected to the head. We will create a new node that accepts a target position for the head, but moves the head to that target in incremental steps to prevent the robot from rocking when the head moves from one extreme to the other. The node will also accept both absolute positions and relative distances to move from the current position.

Our ROS package for this node is called head_control and is located in the head_control folder. The package contains all the usual ROS files and folders.

The action folder contains the point_head.action file, which defines an action that passes a goal containing the target position and feedback on the current position of the head.

    bool absolute
    float64 pan
    float64 tilt
    ---
    ---
    float64 current_pan
    float64 current_tilt

The goal contains pan and tilt values in radians, as well as a boolean flag absolute. If absolute is true, the pan and tilt values represent the absolute target position. If absolute is false, the values represent the relative distance to move the head.

The feedback values provide the current position of the head as it moves towards the target position.
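The effect of the absolute flag can be sketched in a few lines. The function name new_target is hypothetical; the sketch mirrors the description above rather than reproducing the node's code.

```python
def new_target(current_target, pan, tilt, absolute):
    """Return the (pan, tilt) target in radians after applying a goal."""
    if absolute:
        return (pan, tilt)
    return (current_target[0] + pan, current_target[1] + tilt)

target = (0.0, 0.0)
target = new_target(target, 0.5, 0.25, absolute=True)      # go to a position
target = new_target(target, -0.25, 0.125, absolute=False)  # nudge from there
print(target)  # (0.25, 0.375)
```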

The config folder contains a config.yaml file that can be used to override default configuration values. You can configure:

- The default position of the head

- The maximum value the pan and tilt device should move per request, to prevent the servo from moving a large angle in one step and causing the head to shudder

    head:
      position:
        pan: 0.0
        tilt: 0.0
      max_step:
        pan: 0.0872665
        tilt: 0.0872665

The include/head_control and src folders contain the C++ code for the package. We have one C++ class, HeadControlNode, and a main routine within the head_control_node.cpp file.

The main routine informs ROS of our node, creates an instance of the class for the node, and passes it the node handle and node name. For the first time in this project, we will not hand total control of the node to ROS. Instead, we will retain control to move the servos in small incremental steps to a target position.

Before entering the loop, we create an instance of ros::Rate and pass it the desired timing, in this case, 10Hz. Inside the loop, we call r.sleep, which attempts to maintain the loop at 10Hz by accounting for the time used to complete the work in the loop.

Our loop will continue until the call to ros::ok returns false, indicating that the node has finished shutting down.

In the loop, we will call moveServo, which is described later in the article.

    int main(int argc, char **argv)
    {
        ros::init(argc, argv, "head_control_node");
        ros::NodeHandle n;
        std::string node_name = ros::this_node::getName();
        HeadControlNode head_control(n, node_name);
        ROS_INFO("%s started", node_name.c_str());

        // We need control of the node to step the servos to the target position in small steps
        ros::Rate r(10); // 10Hz

        while(ros::ok())
        {
            // Check if the servos need moving
            head_control.moveServo();

            ros::spinOnce();
            r.sleep();
        }

        return 0;
    }
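For readers more familiar with Python, the idea behind the rate object can be sketched as follows. This is a simplified analogue written for illustration, not the ros::Rate implementation. The SimpleRate class and its injectable clock and sleep functions are assumptions that make the behaviour easy to test without waiting.

```python
import time

class SimpleRate:
    """Sleep just long enough to keep a loop at a fixed frequency."""

    def __init__(self, hz, clock=time.monotonic, sleep=time.sleep):
        self._period = 1.0 / hz
        self._clock = clock
        self._sleep = sleep
        self._next = clock() + self._period

    def sleep(self):
        # Sleep only for what is left of the period after the loop's work
        remaining = self._next - self._clock()
        if remaining > 0:
            self._sleep(remaining)
        self._next += self._period
        return max(remaining, 0.0)

rate = SimpleRate(10)  # 10Hz, i.e. a 100ms period
for _ in range(3):
    time.sleep(0.02)   # pretend the loop body takes about 20ms
    print("slept for about %.2fs" % rate.sleep())
```

If the work in the loop takes longer than the period, the sketch does not sleep at all on that pass.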

The rest of the file contains the HeadControlNode class.

The constructor for HeadControlNode registers the callback function pointHeadCallback with the action server. This callback will be called when the action server receives the action goal, initiating the action. The constructor then starts the server with the as_.start() call.

It also advertises that it will publish the pan_tilt_node/joints topic, which will be used to pass the required pan/tilt position to the pan/tilt node.

    // Constructor
    HeadControlNode::HeadControlNode(ros::NodeHandle n, std::string name) : as_(n, name, false)
    {
        nh_ = n;

        as_.registerGoalCallback(boost::bind(&HeadControlNode::pointHeadCallback, this));
        as_.start();

        // Topic to move head
        move_head_pub_ = nh_.advertise<sensor_msgs::JointState>("pan_tilt_node/joints", 10, true);

        // Obtain any configuration values from the parameter server.
        // If they don't exist use the defaults

        // Joint names
        nh_.param<std::string>("/servo/index0/pan/joint_name", pan_joint_name_, "reserved_pan0");
        nh_.param<std::string>("/servo/index0/tilt/joint_name", tilt_joint_name_, "reserved_tilt0");

        // Maximum angle we can move in one go
        nh_.param("/head/max_step/pan", pan_step_, 0.174533);
        nh_.param("/head/max_step/tilt", tilt_step_, 0.174533);

        double pan;  // Pan default position to return to
        double tilt; // Tilt default position to return to
        nh_.param("/head/position/pan", pan, 0.0);
        nh_.param("/head/position/tilt", tilt, 0.0);
        default_position_.pan = pan;
        default_position_.tilt = tilt;

        // Set up the message we will publish
        msg_.name.push_back(pan_joint_name_);
        msg_.name.push_back(tilt_joint_name_);
        msg_.position.push_back(0.0);
        msg_.position.push_back(0.0);

        // We will often return to this position when a task is completed
        current_pan_tilt_ = default_position_;

        // We don't know where the servo starts from so just jump to the required position
        // Publish a start position to get the head in a known position.
        publishJointState(current_pan_tilt_);

        move_head_ = false;
        movement_complete_ = false;
        target_pan_tilt_ = current_pan_tilt_;
    }

It then sets some configuration defaults and reads any overrides from the parameter server should they be available.

Next, it sets the names of the joints in the joint state message, which will not change.

Finally, it publishes a message to move the head to a known starting point. This is necessary because we don't know the starting position of the head after power-up, so we can't move to the target position in small steps.

    // This callback is for the point head action
    void HeadControlNode::pointHeadCallback()
    {
        head_control::point_headGoal::ConstPtr goal;

        goal = as_.acceptNewGoal();

        // Set the target position to the request position
        if (goal->absolute == true)
        {
            target_pan_tilt_.pan = goal->pan;
            target_pan_tilt_.tilt = goal->tilt;
        }
        else
        {
            target_pan_tilt_.pan += goal->pan;
            target_pan_tilt_.tilt += goal->tilt;
        }

        // Indicate that the servos should be moved
        move_head_ = true;

        movement_complete_ = false;
    }

The pointHeadCallback function is called by ROS when the action server receives a goal message. The goal data is either the absolute or relative target position, depending on the state of the absolute flag.

The function calls the action server to inform it that the goal has been accepted, stores the new target position, and sets the move_head flag to true, indicating that the head needs to be moved.

    // Function to move the servos if required by a step amount.
    // This is to stop the head shuddering if the servo
    // is moved to the target position in one movement.
    void HeadControlNode::moveServo()
    {
        if(move_head_ == true)
        {
            if(as_.isPreemptRequested() || !ros::ok())
            {
                as_.setPreempted();
                movement_complete_ = false;
                move_head_ = false;
            }
            else if(movement_complete_ == true)
            {
                // We have reached the target but give time to settle
                loop_count_down_--;

                if(loop_count_down_ <= 0)
                {
                    movement_complete_ = false;
                    move_head_ = false;
                    head_control::point_headResult result;
                    as_.setSucceeded(result);
                }
            }
            else
            {
                if((target_pan_tilt_.pan == current_pan_tilt_.pan) &&
                   (target_pan_tilt_.tilt == current_pan_tilt_.tilt))
                {
                    // Last time around we must have requested the final move
                    movement_complete_ = true;
                    loop_count_down_ = 8;
                }
                else
                {
                    // Still moving, calculate pan movement
                    if(std::abs(target_pan_tilt_.pan - current_pan_tilt_.pan) > pan_step_)
                    {
                        // Distance to target too great to move in one go
                        if(target_pan_tilt_.pan > current_pan_tilt_.pan)
                        {
                            // Add the step to current
                            current_pan_tilt_.pan += pan_step_;
                        }
                        else
                        {
                            // Subtract step from current
                            current_pan_tilt_.pan -= pan_step_;
                        }
                    }
                    else
                    {
                        // Can move to the target position in one go
                        // (or pan is in fact already there but tilt is not)
                        current_pan_tilt_.pan = target_pan_tilt_.pan;
                    }

                    // Calculate tilt movement
                    if(std::abs(target_pan_tilt_.tilt - current_pan_tilt_.tilt) > tilt_step_)
                    {
                        // Distance to target too great to move in one go
                        if(target_pan_tilt_.tilt > current_pan_tilt_.tilt)
                        {
                            // Add the step to current
                            current_pan_tilt_.tilt += tilt_step_;
                        }
                        else
                        {
                            // Subtract step from current
                            current_pan_tilt_.tilt -= tilt_step_;
                        }
                    }
                    else
                    {
                        // Can move to the target position in one go
                        // (or tilt is in fact already there but pan is not)
                        current_pan_tilt_.tilt = target_pan_tilt_.tilt;
                    }

                    // Publish the movement
                    publishJointState(current_pan_tilt_);

                    // Publish feedback
                    head_control::point_headFeedback feedback;
                    feedback.current_pan = current_pan_tilt_.pan;
                    feedback.current_tilt = current_pan_tilt_.tilt;
                    as_.publishFeedback(feedback);
                }
            }
        }
    }

The moveServo function is called by the main loop in our code. It checks to see if a request to move the head was made and, if so, enters an 'if', 'else if', 'else' construct.

The 'if' part of this construct checks to see if the action has been pre-empted. If so, it accepts the pre-emption and tidies up.

The 'else if' part checks to see if the head movement is complete. If so, a counter is decremented. The counter gives the head time to settle after the servos reach the target position, so that residual movement does not blur any camera images. When the counter reaches zero, the action is reported to the action server as complete.

The 'else' part is responsible for calculating the next step movement of the servos towards the target position, publishing the joint state message with the next required servo position using the helper function publishJointState, and reporting the feedback to the action server.
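The stepping and settling behaviour described above can be sketched in a few lines of plain Python. This is only an illustration for a single axis, not the node's code: the names step_toward and simulate_move and the example step size are assumptions made for the sketch, while the settle count of 8 comes from the loop_count_down_ value in moveServo.

```python
def step_toward(current, target, step):
    """Move current toward target by at most step, snapping when within one step."""
    if abs(target - current) > step:
        # Too far to reach in one go: advance by a single step.
        return current + step if target > current else current - step
    # Close enough (or already there): land exactly on the target.
    return target


def simulate_move(current, target, step, settle_loops=8):
    """Return (published positions, loop iterations until success is reported)."""
    if step <= 0:
        raise ValueError("step must be positive")
    published = []
    loops = 0
    # 'else' branch, still moving: one position is published per iteration.
    while current != target:
        current = step_toward(current, target, step)
        published.append(current)
        loops += 1
    # 'else' branch, target reached: this iteration only starts the countdown.
    loops += 1
    # 'else if' branch: the countdown runs down before success is reported.
    loops += settle_loops
    return published, loops


# Example values (assumed): move one axis from 0.0 to 0.625 rad in 0.25 rad steps.
positions, loops = simulate_move(current=0.0, target=0.625, step=0.25)
print(positions)  # [0.25, 0.5, 0.625]
print(loops)      # 12
```

Because the final step assigns the target value itself rather than adding another increment, the exact equality test between current and target positions is safe in this scheme.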

    // This function creates and publishes a joint state message
    void HeadControlNode::publishJointState(struct position pan_tilt)
    {
        msg_.position[0] = pan_tilt.pan;
        msg_.position[1] = pan_tilt.tilt;
        msg_.header.stamp = ros::Time::now();
        move_head_pub_.publish(msg_);
    }

The publishJointState function is a helper function that updates the position values in the joint state message and then publishes the message.

This file, test.launch, will launch all the nodes developed to move the head.

    <?xml version="1.0" ?>
    <launch>
      <rosparam command="load" file="$(find pan_tilt)/config/config.yaml" />
      <rosparam command="load" file="$(find head_control)/config/config.yaml" />
      <node pkg="pan_tilt" type="pan_tilt_node" name="pan_tilt_node" output="screen" />
      <node pkg="rosserial_python" type="serial_node.py"
            name="serial_node" output="screen" args="/dev/ttyUSB0"/>
      <node pkg="head_control" type="head_control_node" name="head_control_node" output="screen"/>
    </launch>

Action Client

In the previous sections, both of our nodes included an action server. We now turn to action clients, which send goals to a server and receive its feedback and result. Later in this article, we will introduce a ROS package for building state machines and sub-state machines to control the robot's missions. With that package, an individual state can act as the action client, and the communication is handled behind the scenes.

To test the system we have developed so far and to demonstrate how to write an action client, we will create two test nodes. Each node will include an action client.

Our first node is a simple Python node designed to test the face recognition node. The ROS package for this node is called ease_robot_recognition_test and is available in the ease_robot_recognition_test folder. The package contains all the usual ROS files and folders.

All the code is contained in the ease_robot_recognition_test_node.py file in the src folder.

The code initializes our node and creates an action client. Note that the name passed to the SimpleActionClient, in our case 'face_recognition', must match the name given to the action server.

We then call wait_for_server, and the code will wait here until it is able to make contact with the server. We then create a goal, which in this case contains no data, and send the goal to the server.

In our simple example, we then wait until the result is returned, and the node finishes by printing the ID and names of any faces recognized and returned in the result.

    #!/usr/bin/env python
    import rospy
    import actionlib
    from face_recognition_msgs.msg import (scan_for_facesAction,
                                           scan_for_facesGoal, scan_for_facesResult)

    # Initialize the node and create the action client
    rospy.init_node('face_recognition_client')
    client = actionlib.SimpleActionClient('face_recognition', scan_for_facesAction)
    # Block until the action server is available
    client.wait_for_server()
    # The goal carries no data; send it and wait for the result
    goal = scan_for_facesGoal()
    client.send_goal(goal)
    client.wait_for_result()
    result = client.get_result()
    print(result.ids_detected)
    print(result.names_detected)
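The test node above waits indefinitely for both the server and the result. As a hedged variation, the sketch below bounds both waits and cancels the goal if no result arrives in time. The helper name scan_with_timeouts and the FakeClient stand-in are inventions for this illustration so that it runs without ROS; the client methods it calls (wait_for_server, send_goal, wait_for_result, cancel_goal, get_result) are the ones provided by actionlib's SimpleActionClient, where the two waits return False on timeout.

```python
def scan_with_timeouts(client, goal, server_timeout, result_timeout):
    """Send goal and return its result, or None if either wait times out."""
    if not client.wait_for_server(server_timeout):
        return None                # server never came up
    client.send_goal(goal)
    if not client.wait_for_result(result_timeout):
        client.cancel_goal()       # give up and ask the server to stop
        return None
    return client.get_result()


class FakeClient:
    """Stand-in for actionlib.SimpleActionClient so the sketch runs without ROS."""

    def __init__(self, server_up, finishes):
        self.server_up = server_up
        self.finishes = finishes
        self.cancelled = False
        self.goal = None

    def wait_for_server(self, timeout):
        return self.server_up

    def send_goal(self, goal):
        self.goal = goal

    def wait_for_result(self, timeout):
        return self.finishes

    def cancel_goal(self):
        self.cancelled = True

    def get_result(self):
        return "result"


print(scan_with_timeouts(FakeClient(True, True), "goal", 5.0, 30.0))   # result
print(scan_with_timeouts(FakeClient(False, True), "goal", 5.0, 30.0))  # None
```

In the real node, the client would be the SimpleActionClient created above, the goal a scan_for_facesGoal, and the timeouts rospy.Duration values; the 5 and 30 second figures here are arbitrary.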

Our next package is designed to test the head_control node. We will write a slightly more complicated node, this time written in C++.

Our ROS package is called ease_robot_head_test and is available in the ease_robot_head_test folder. The package contains all the usual ROS files and folders.

The include/ease_robot_head_test and src folders contain the C++ code for the package. For this package, we have one C++ class, EaseRobotHeadTestNode, and a main routine contained within the ease_robot_head_test_node.cpp file.

The main routine informs ROS of our node, creates an instance of the class for the node, and passes it the node handle, logs that the node has started, and hands control to ROS with the call to ros::spin.

    int main(int argc, char **argv)
    {
        ros::init(argc, argv, "ease_robot_head_test");
        ros::NodeHandle n;
        EaseRobotHeadTestNode ease_robot_head_test_node(n);
        std::string node_name = ros::this_node::getName();
        ROS_INFO("%s started", node_name.c_str());
        ros::spin();
        return 0;
    }

The constructor creates an instance of our action client, ac_, and passes it the name of the action server, which in our case is head_control_node. This must match the name we gave to our action server when we created it in the HeadControlNode constructor.

We then read the config parameters to limit the movement of the servos.
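The parameter names read in the constructor, such as /servo/index0/pan/max, correspond to nested keys in the YAML config loaded by the launch file, and a default is used when a key is absent. The plain Python sketch below mimics that lookup on a nested dictionary. The get_param helper and the limit values are assumptions for illustration only; they are not the ROS API or the project's actual configuration.

```python
import math


def get_param(tree, name, default):
    """Look up a '/'-separated name in nested dicts, falling back to default."""
    node = tree
    for key in name.strip("/").split("/"):
        if not isinstance(node, dict) or key not in node:
            return default
        node = node[key]
    return node


# Assumed example configuration: only the pan limits are set.
params = {"servo": {"index0": {"pan": {"max": 1.0, "min": -1.0}}}}

pan_max = get_param(params, "/servo/index0/pan/max", math.pi / 2)
tilt_max = get_param(params, "/servo/index0/tilt/max", math.pi / 2)

print(pan_max)                  # 1.0
print(tilt_max == math.pi / 2)  # True
```

With this behaviour, a missing tilt entry silently leaves the test node with the ±π/2 defaults, so it is worth checking that the config file loaded by the launch file actually defines every limit.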

We are going to use a keyboard node, available from https://github.com/lrse/ros-keyboard, to interact with the system. In the constructor, we subscribe to the topic keyboard/keydown and call the function keyboardCallBack when a message is received on that topic.

The call ac_.waitForServer will wait in the constructor until our action server is running.

    // Constructor
    EaseRobotHeadTestNode::EaseRobotHeadTestNode(ros::NodeHandle n) : ac_("head_control_node", true)
    {
        nh_ = n;
        // Subscribe to receive keyboard input
        key_sub_ = nh_.subscribe("keyboard/keydown", 100,
                                 &EaseRobotHeadTestNode::keyboardCallBack, this);
        nh_.param("/servo/index0/pan/max", max_pan_radians_, M_PI/2.0);
        nh_.param("/servo/index0/pan/min", min_pan_radians_, -(M_PI/2.0));
        nh_.param("/servo/index0/tilt/max", max_tilt_radians_, M_PI/2.0);
        nh_.param("/servo/index0/tilt/min", min_tilt_radians_, -(M_PI/2.0));
        ROS_INFO("EaseRobotHeadTestNode: Waiting for action server to start");
        // wait for the action server to start
        ac_.waitForServer(); //will wait for infinite time
        moving_ = false;
        ROS_INFO("EaseRobotHeadTestNode: Action server started");
    }

The function keyboardCallBack checks the received message and runs a test dependent on the key pressed.

It creates an instance of our action goal, sets the goal parameters, and passes it to the action server with a call to ac_.sendGoal. With the call, we pass three callback functions:

- doneCB, which is called when the action is completed
- activeCB, which is called when the action goes active
- feedbackCB, which is called when feedback on the progress of the action is received

The action can be pre-empted, so if the 'c' key is pressed and moving the head is in progress, we will cancel the action with a call to ac_.cancelGoal.
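The callback writes its test goals as radian literals and accepts a key only when no unexpected modifier is held. The Python sketch below restates tests 3 to 7 as a table in degrees and shows the modifier mask check. Tests 1 and 2 are left out because they depend on the configured limits. The table and function names are made up for this illustration, and the modifier bit values are assumed, not taken from the keyboard package.

```python
import math

# Key -> (absolute, pan in degrees, tilt in degrees), taken from the test comments.
TEST_GOALS_DEG = {
    3: (True, 0.0, 0.0),       # centre the head
    4: (True, 0.0, -45.0),     # pan 0, tilt -45 degrees
    5: (False, 0.0, -10.0),    # tilt up by 10 degrees
    6: (False, 20.0, 0.0),     # pan 20 degrees anti-clockwise
    7: (False, -20.0, 10.0),   # pan 20 degrees clockwise, tilt 10 degrees down
}

# Assumed bit values, for illustration only.
MODIFIER_SHIFT = 0x0001
MODIFIER_NUM = 0x1000


def goal_for_key(key):
    """Return (absolute, pan, tilt) with the angles converted to radians."""
    absolute, pan_deg, tilt_deg = TEST_GOALS_DEG[key]
    return absolute, math.radians(pan_deg), math.radians(tilt_deg)


def modifiers_allowed(modifiers, allowed_mask):
    """True if no modifier bit outside allowed_mask is set."""
    return (modifiers & ~allowed_mask) == 0


absolute, pan, tilt = goal_for_key(4)
print(absolute, pan, round(tilt, 6))                              # True 0.0 -0.785398
print(modifiers_allowed(MODIFIER_NUM, MODIFIER_NUM))              # True
print(modifiers_allowed(MODIFIER_NUM | MODIFIER_SHIFT, MODIFIER_NUM))  # False
```

Rounded to six places, the converted values match the literals in the callback: 45 degrees is 0.785398, 10 degrees is 0.174533 and 20 degrees is 0.349066.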

    void EaseRobotHeadTestNode::keyboardCallBack(const keyboard::Key::ConstPtr& msg)
    {
        head_control::point_headGoal goal;
        // Check for key 1 with no modifiers (num lock is allowed)
        if((msg->code == keyboard::Key::KEY_1) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 1, test 1 move to max pan and tilt
            goal.absolute = true;
            goal.pan = max_pan_radians_;
            goal.tilt = max_tilt_radians_;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_2) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 2, test 2 move to min pan and tilt
            goal.absolute = true;
            goal.pan = min_pan_radians_;
            goal.tilt = min_tilt_radians_;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_3) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 3, test 3 move to pan 0, tilt 0
            goal.absolute = true;
            goal.pan = 0.0;
            goal.tilt = 0.0;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_4) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 4, test 4 move to pan 0, tilt -45 degrees
            goal.absolute = true;
            goal.pan = 0.0;
            goal.tilt = -0.785398;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_5) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 5, test 5 move tilt up by 10 degrees
            goal.absolute = false;
            goal.pan = 0;
            goal.tilt = -0.174533;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_6) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 6, test 6 move pan by 20 degrees anti-clockwise
            goal.absolute = false;
            goal.pan = 0.349066;
            goal.tilt = 0;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        if((msg->code == keyboard::Key::KEY_7) &&
           ((msg->modifiers & ~keyboard::Key::MODIFIER_NUM) == 0))
        {
            // Key 7, test 7 move pan by 20 degrees clockwise and tilt by 10 degrees down
            goal.absolute = false;
            goal.pan = -0.349066;
            goal.tilt = 0.174533;
            // Need boost::bind to pass in the 'this' pointer
            ac_.sendGoal(goal,
                boost::bind(&EaseRobotHeadTestNode::doneCB, this, _1, _2),
                boost::bind(&EaseRobotHeadTestNode::activeCB, this),
                boost::bind(&EaseRobotHeadTestNode::feedbackCB, this, _1));
        }
        else if((msg->code == keyboard::Key::KEY_c) &&
                ((msg->modifiers & ~EaseRobotHeadTestNode::SHIFT_CAPS_NUM_LOCK_) == 0))
        {
            // Key 'c' or 'C', cancel action
            if(moving_ == true)

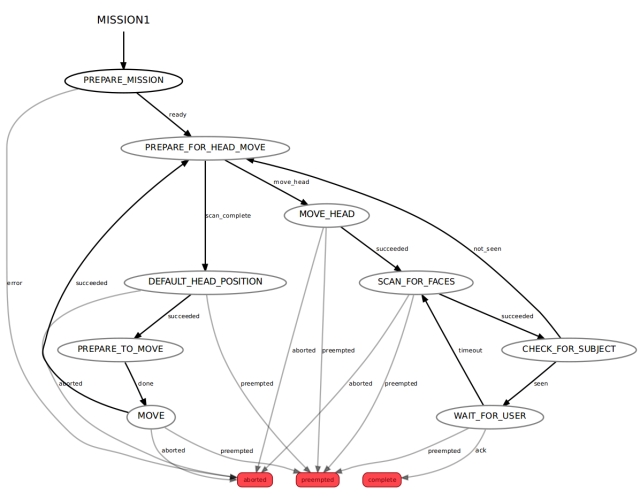

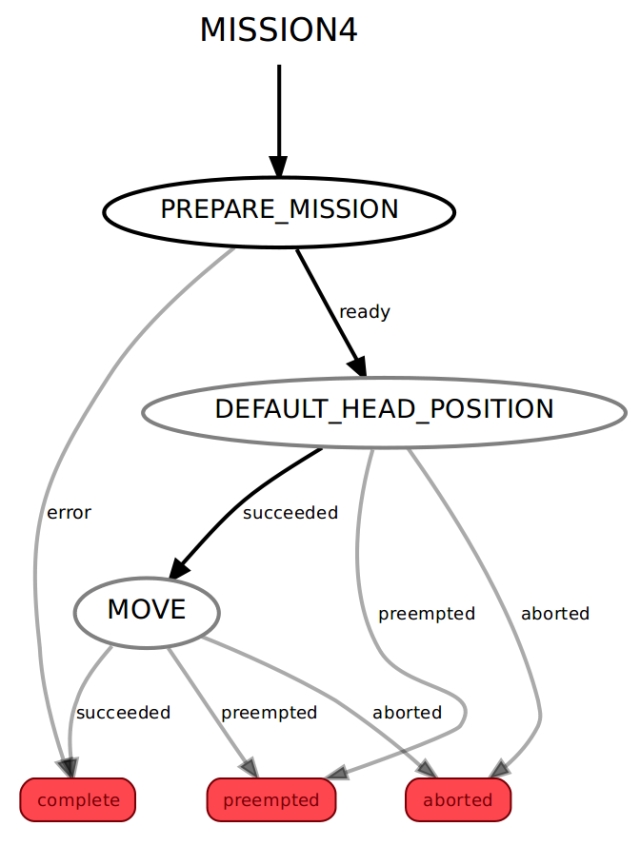

{